Great article/paper on why this isn’t a good idea: https://www.hackerfactor.com/blog/index.php?/archives/1010-C2PAs-Butterfly-Effect.html

That was a great read. I love takedowns.

This is tilting at windmills. If someone has physical possession of a piece of hardware, you should assume that it’s been compromised down to the silicon, no matter what clever tricks they’ve tried to stymie hackers with. Also, the analog hole will always exist. Just generate a deepfake and then take a picture of it.

So basically I would just have to screenshot the image or export it to a new file type that doesn’t support their fancy encryption and then I can do whatever I want with the photo?

Ctrl + F “Blockchain”

… Oh?

Well, that’s a surprise: a system that actually is comparable to a blockchain in a different medium doesn’t plaster the word everywhere. We’ve certainly seen the term used far more for much, much less relevance.

Neat tech. Hope it catches on.

And where do you see any resemblance to a blockchain?

From the article it is just cryptographic signing - once by the camera with its built-in key and once on changes by the CAI tool which has its own key.

Maybe I am misunderstanding here, but what is going to stop anyone from just editing the photo anyway? There will still be a valid certificate attached. You can change the metadata to match the cert details. So… ??

I don’t know about this specific product but in general a digital signature is generated based on the content being signed, so any change to the content will make the signature invalid. It’s the whole point of using a signature.
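To make the point above concrete, here is a minimal sketch of "sign the content, then any edit breaks the signature." It uses a toy textbook RSA key built from two small known primes, which is an assumption purely for illustration and is not how the camera or C2PA actually signs anything. The names `sign`, `verify`, and `digest_as_int` are made up for this example.

```python
import hashlib

# Toy RSA key from two known Mersenne primes. Illustration only:
# far too small for real use, and real schemes also add padding.
P = 2**61 - 1
Q = 2**89 - 1
N = P * Q                            # public modulus
E = 65537                            # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))    # private exponent (Python 3.8+)

def digest_as_int(content: bytes) -> int:
    """Hash the content and reduce it to a number below the modulus."""
    return int.from_bytes(hashlib.sha256(content).digest(), "big") % N

def sign(content: bytes) -> int:
    """Only the holder of the private exponent D can produce this value."""
    return pow(digest_as_int(content), D, N)

def verify(content: bytes, signature: int) -> bool:
    """Anyone with the public values (N, E) can run this check."""
    return pow(signature, E, N) == digest_as_int(content)

photo = b"\xff\xd8 pretend these bytes are a photo"
sig = sign(photo)

edited = photo + b" plus one small edit"
print(verify(photo, sig))    # True: untouched content still matches
print(verify(edited, sig))   # False: the old signature no longer fits
```

The signature is computed from a hash of the content, so copying the old signature onto an edited photo fails verification; producing a new valid one would need the private key.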

I was too tired to investigate further last night. That is the case here. Sections of the data are hashed and used to create the certs:

https://c2pa.org/specifications/specifications/1.3/specs/C2PA_Specification.html#_hard_bindings

Which means that there isn’t a way to edit the photo and have the cert match, and also no way to compress or change the file encoding without invalidating the cert.
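A rough sketch of why re-encoding breaks a hash over the file's bytes, assuming the binding covers the encoded bytes rather than the decoded pixels. This is a simplified stand-in and not the actual C2PA format; `zlib` at two compression levels plays the role of "same picture, different encoding."

```python
import hashlib
import zlib

# Stand-in for decoded image data (hypothetical, just repeated bytes).
pixels = bytes(range(256)) * 64

# Two encodings of the very same data at different compression levels.
file_a = zlib.compress(pixels, 1)
file_b = zlib.compress(pixels, 9)

# Decoding either file gives back identical content...
same_pixels = zlib.decompress(file_a) == zlib.decompress(file_b)

# ...but the stored bytes differ, so a hash over the file differs too.
hash_a = hashlib.sha256(file_a).hexdigest()
hash_b = hashlib.sha256(file_b).hexdigest()

print(same_pixels)        # True
print(hash_a == hash_b)   # False
```

Since the signature is tied to the hash, a re-saved or recompressed copy would no longer validate even if it looks identical.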

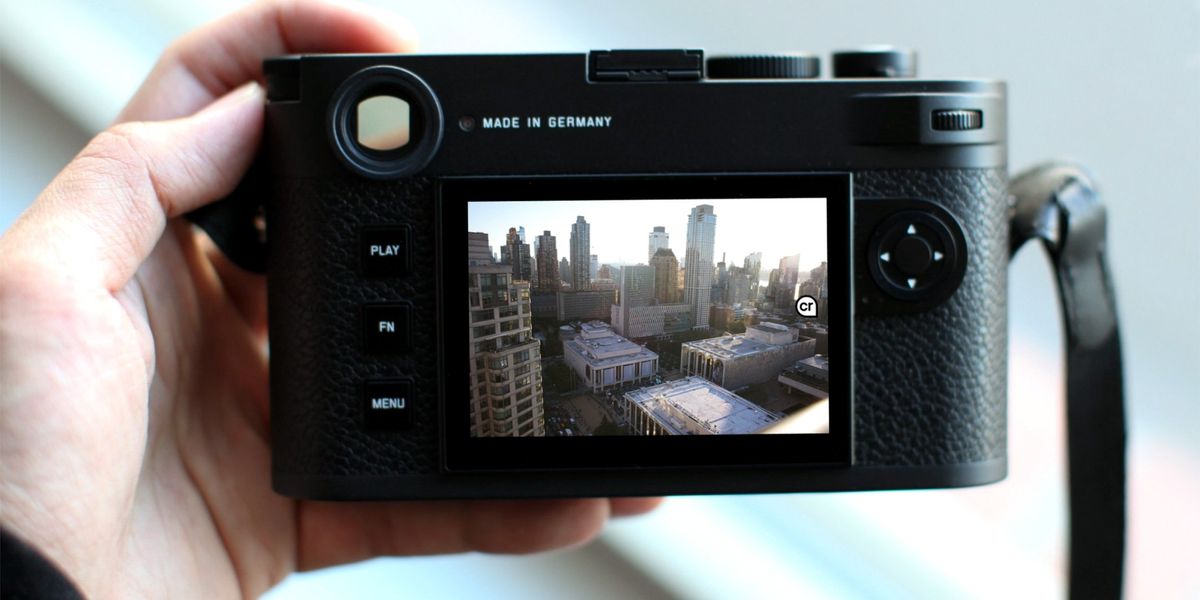

so it’s for jpeg shooters, basically. unfortunately the leica bodies aren’t really known for producing good jpegs.

Ah, DRM for your photos.

Great.

Not at all. From what I understand of this article, it wouldn’t stop you from doing anything you wanted with the image. It just generates a signed certificate at the moment the picture is taken that authenticates that that particular image existed at that particular time. You can copy the image if you like.

This is an adorable show of optimism.

🙄

Digital signatures are not nefarious. Quit freaking out about things just because you don’t understand them.

Forgive the cynicism, but: free, for now.

What happens when the company decides all of a sudden to lock the service behind a subscription pay wall?

Do you still maintain rights to your photos when you use this service?

I have no idea what you’re proposing be “locked behind a subscription pay wall.” The certificate exists and is public from the moment the picture is taken. It can be validated by anyone from that point forward, otherwise it would be pointless. Post the timestamp and the public key on a public blockchain and there’s nothing that can be “taken away” after that.

Your rights to your photos are from your copyright on them. This service shouldn’t affect that. Read the EULA and don’t sign your rights away and there’s no way they can be taken.

I suppose if they are running some kind of identity-verification service they could cut you off from that and prevent future photos you take from being signed after that, but that doesn’t change the past.

What happens is the signature attached to the photo becomes impossible to maintain when the photo is edited, but the photos themselves are no different from any other photo. In other words, just a return to the status quo.

This isn’t DRM. I can’t believe you have so many upvotes for such blatant FUD.

Welcome to ~~Reddit~~ Lemmy, where everyone just reads the title and jumps to conclusions based on that

This is cool and all. But I am more concerned about finding a way to prevent my images from being scraped for AI training.

Something like an imperceptible gray grid over the image that would throw off the AI training, and not force people to use certain browsers / apps.

This is awesome, thanks for sharing!

After reading, I think it perceptibly alters the image, but I’m definitely going to play around with it and see what’s possible.

Beware, this is made by Ben Zhao, the University of Chicago professor who stole open source code for his last data poisoning scheme.

I was wondering when crypto content would become a thing like this.

It’s one of the most obvious uses for it, I’ve suggested this sort of thing many times in threads where people demand “name one actually practical use for blockchains.” Of course so many people have a fundamental hatred of all things blockchain at this point that it’s probably best not to advertise it now. Just say what it can do for you and leave the details in the documentation for people to dig for if they really want to know.