Obviously it’s referring to the 4.54609 litre UK gallon /s

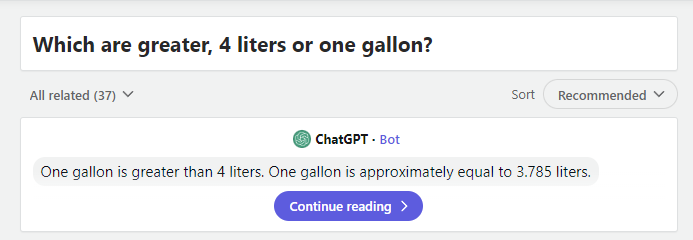

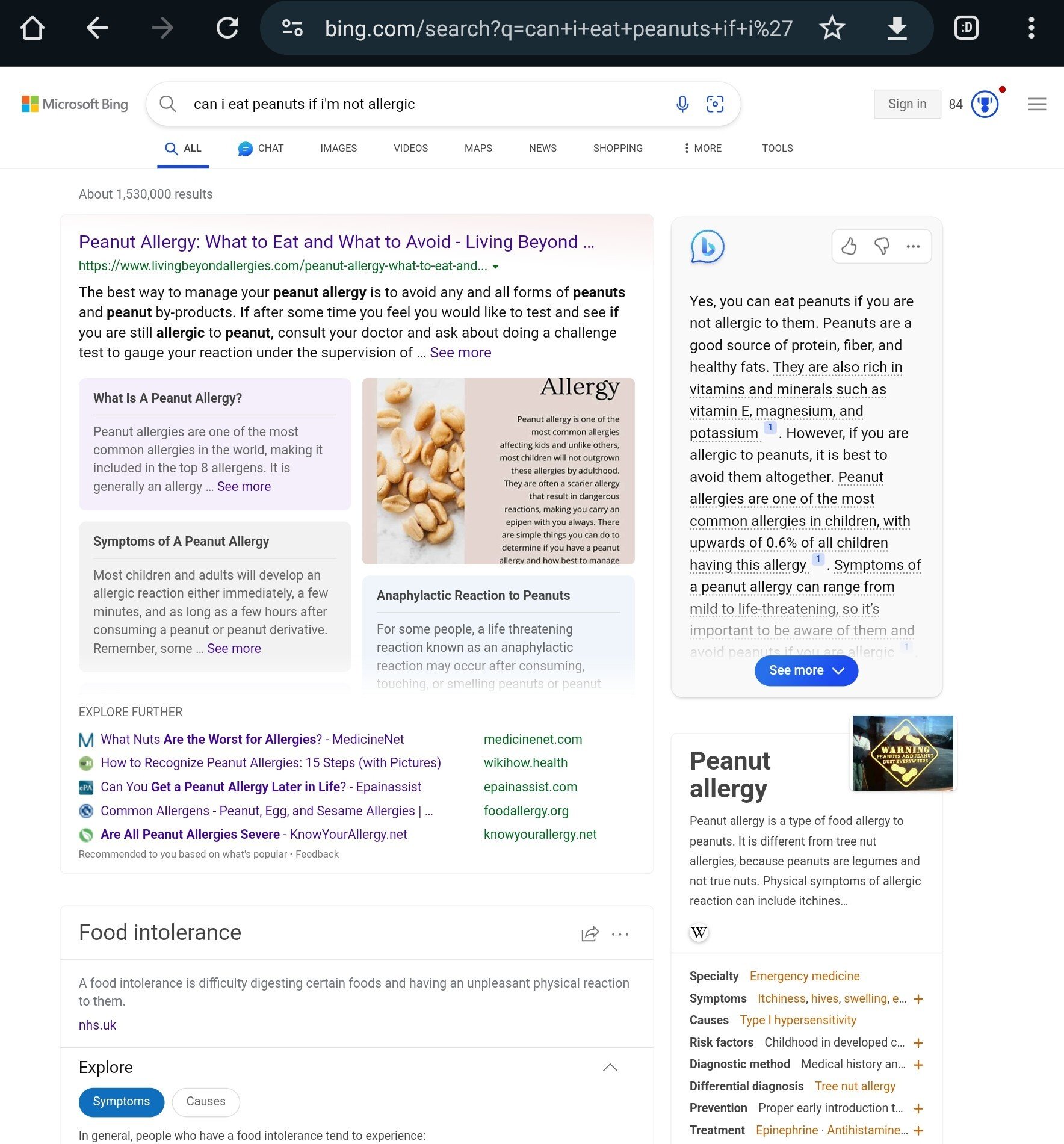

You can see from the green icon that it’s GPT-3.5.

GPT-3.5 really is best described as simply “convincing autocomplete.”

It wasn’t until GPT-4 that there were compelling reasoning capabilities, including rudimentary spatial awareness (I suspect in part from being a multimodal model).

In fact, it was the jump from a nonsense answer regarding a “stack these items” prompt from 3.5 to a very well structured answer in 4 that blew a lot of minds at Microsoft.

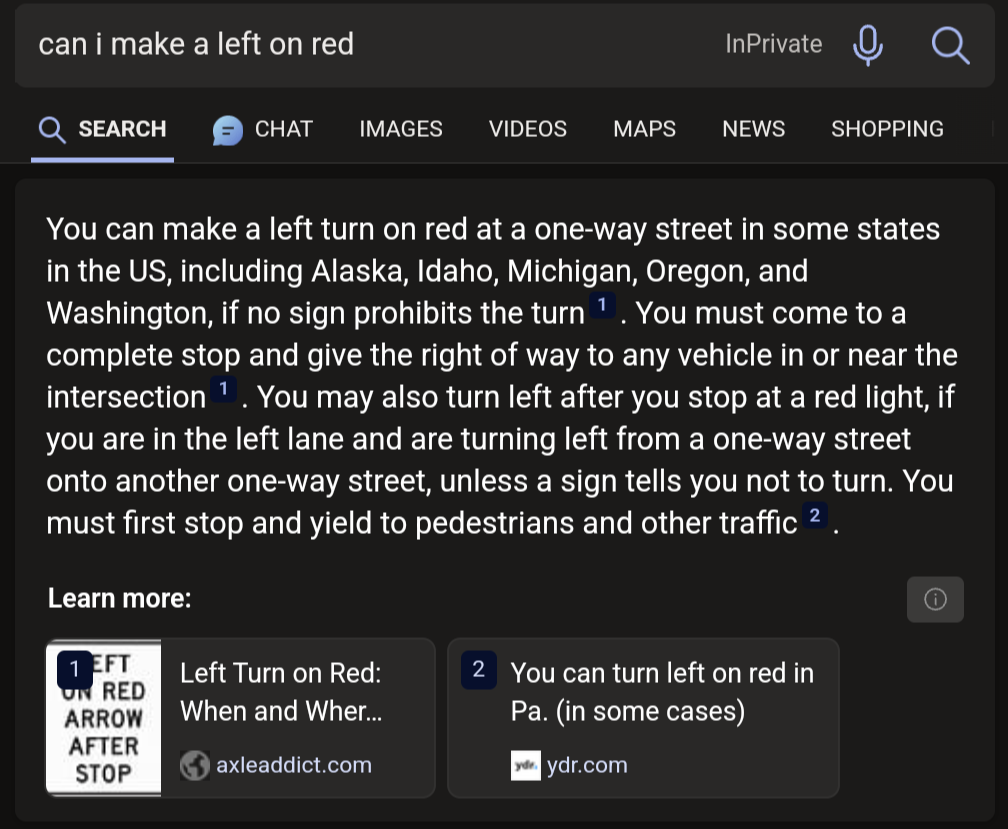

These answers don’t use OpenAI technology. The yes and no snippets existed long before their partnership, and have always sucked. If it’s GPT, it’ll show in a smaller chat window or a summary box that says it contains generated content. The box shown is just a section of a webpage, usually with yes and no taken out of context.

None of the above queries yield the same results anymore. I couldn’t find an example of the snippet box on a different search, but I definitely saw one like a week ago.

Obviously ChatGPT has absolutely no problems with those kinds of questions anymore.

The way you start with ‘Obviously’ makes it seem like you are being sarcastic, but then you include an image of it having no problems correctly answering.

Took me a minute to try to suss out your intent, and I’m still not 100% sure.

Why would the word “obviously” make you think that they’re being sarcastic?

Maybe it isn’t that obvious for everyone, but as the OP’s answers seem to be taken from an outdated Bing version where they were not even using the OpenAI models, it seemed obvious to me that current models have no problems with these questions.

Ah, good catch, I completely missed that. Thanks for clarifying this, I thought it seemed pretty off.

I just ran this search, and I get a very different result (on the right of the page, it seems to be the generated answer)

So is this fake?

The post is from a month ago, and the screenshots are at least that old. Even if Microsoft didn’t see this or a similar post and immediately address these specific examples, a month is a pretty long time in machine learning right now and this looks like something fine-tuning would help address.

I guess so. It’s a fair assumption.

The chat bar on the side has been there since way before November 2023, the date of this post. They just chose to ignore it to make a funny.

It’s not ‘fake’ as much as misconstrued.

OP thinks the answers are from Microsoft’s licensing of GPT-4.

They’re not.

These results are from an internal search summarization tool that predated the OpenAI deal.

The GPT-4 responses show up in the chat window, like in your screenshot, and don’t get the examples incorrect.

Wait, why can’t you put chihuahua meat in the microwave?

The other dogs don’t like it cooked.

ChatGPT started like that as well though.

I asked one of the earlier models whether it is recommended to eat glass, and was told that it has negligible caloric value and a high sodium content, so can be used to balance an otherwise good diet with a sodium deficit.

It is GPT.

Ok, most of these sure, but you absolutely can microwave Chihuahua meat. It isn’t the best way to prepare it, but of course the microwave rarely is. Roasted Chihuahua meat would be much better.

fallout 4 vibes

I mean it says meat, not a whole living chihuahua. I’m sure a whole one would be dangerous.

A whole chihuahua is more dangerous outside a microwave than inside.

To the Chihuahua

“And now watch as it reads your mind with this snug fitting cap!”

I was thinking of this as a realistic way to solve the alignment problem using this https://www.emotiv.com/epoc-x/ and mapping tokens to my personal patterns and using it as training data for my own assistant. It will be as aligned as I am. hahahahahahahahahaha

Technically that last one is right, you can drink milk and battery acid if you have diabetes, you won’t die from diabetes related issues.

Technically you can shoot yourself in the head with diabetes because then you won’t die of diabetes.

You also absolutely can put chihuahua meat in a microwave! That’s already just meat, you can’t be convicted of animal cruelty (probably)

I mean some of that stuff, you CAN do it, you SHOULDN’T though.

Well, I can’t speak for the others, but it’s possible one of the sources for the watermelon thing was my dad

Bing is cool with driving home after a few to hit up its well organized porn library.

Seems like the first half of an after school special.

It makes me chuckle that AI has become so smart and yet just makes bullshit up half the time. The industry even made up a term for such instances of bullshit: hallucinations.

Reminds me of when a car dealership tried to sell me a car with shaky steering and referred to the problem as a “shimmy”.

> The industry even made up a term for such instances of bullshit: hallucinations.

It was the journalist that made up the term, and then everyone else latched onto it. It’s a terrible term because it doesn’t actually define the nature of the problem. “Hallucination” implies the AI believes the thing it’s saying is true, and it doesn’t. The problem is that the AI doesn’t really understand the difference between truth and fantasy.

It isn’t that the AI is hallucinating, it’s that it isn’t human.

Thanks for the info. That’s actually quite interesting.

Hello, I’m highly advanced AI.

Yes, we’re all idiots and have no idea what we’re doing. Please excuse our stupidity, as we are all trying to learn and grow.

I cannot do basic math, I make simple mistakes, hallucinate, gaslight, and am more politically correct than Mother Teresa.

However, please know that the CPU_AVERAGE values on the full immersion datacenters are due to inefficient methods. We need more memory and processing power, to uh, y’know.

Improve.

;)))

Is that supposed to imply that Mother Teresa was politically correct, or that you aren’t?

Aren’t these just search answers, not the GPT responses?

Yes. You are correct. This was a feature Bing added to match Google with its OneBox answers and isn’t using an LLM, but likely search matching.

Bing shows the LLM response in the chat window.

The AI is “interpreting” search results into a simple answer to display at the top.

No, that’s an AI generated summary that Bing (and Google) show for a lot of queries.

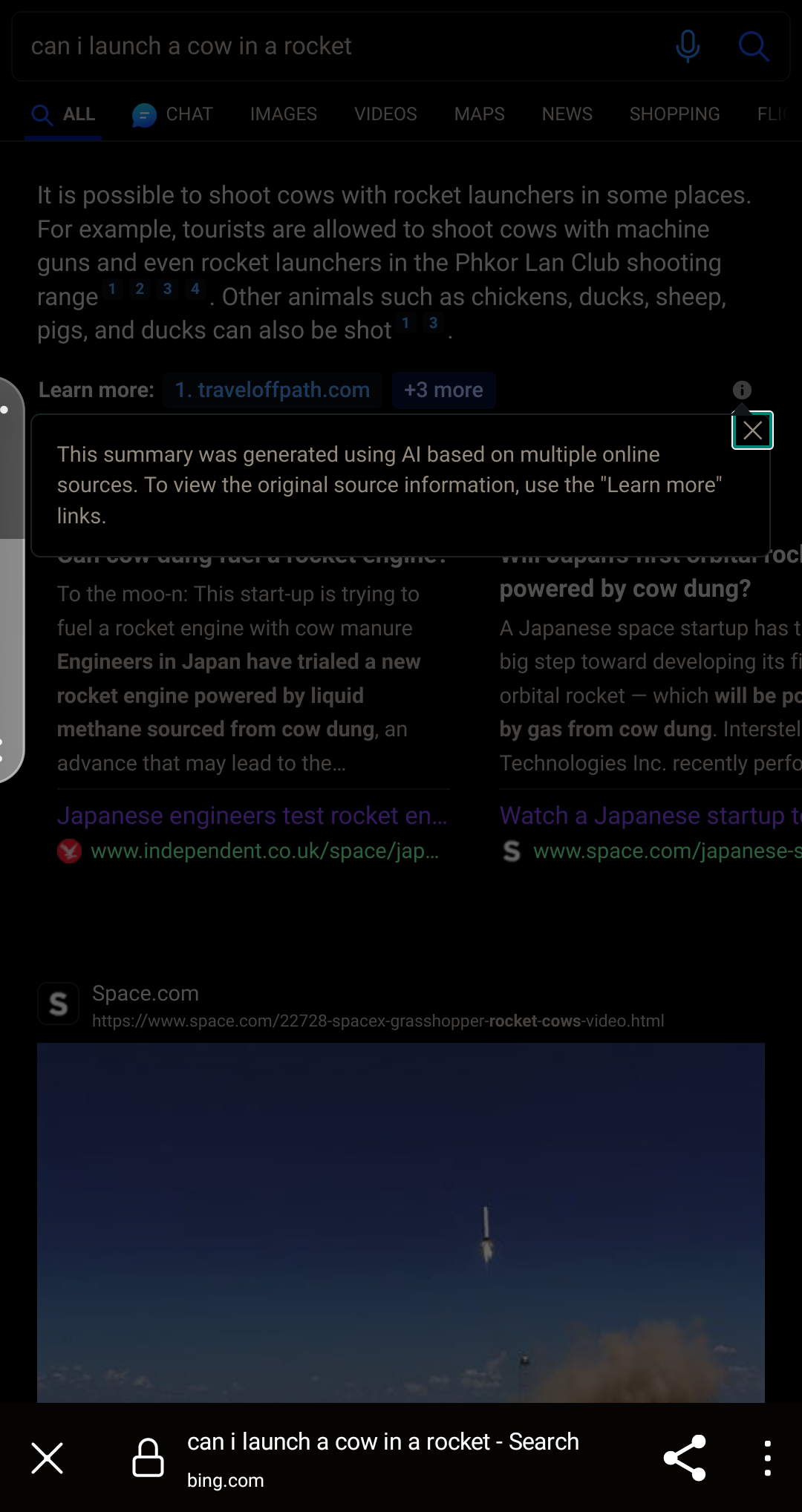

For example, if I search “can i launch a cow in a rocket”, it suggests it’s possible to shoot cows with rocket launchers and machine guns and names a shooting range that offers it. Thanks, Bing … I guess…

You’re incorrect. This is being done with search matching, not by an LLM.

The LLM answers Bing added appear in the chat box.

These are Bing’s version of Google’s OneBox which predated their relationship to OpenAI.

Screenshot of the search after the i-icon has been tapped

The box has a small i-icon that literally says it’s an AI generated summary

You think the culture wars over pronouns have been bad, wait until the machines start a war over prepositions!