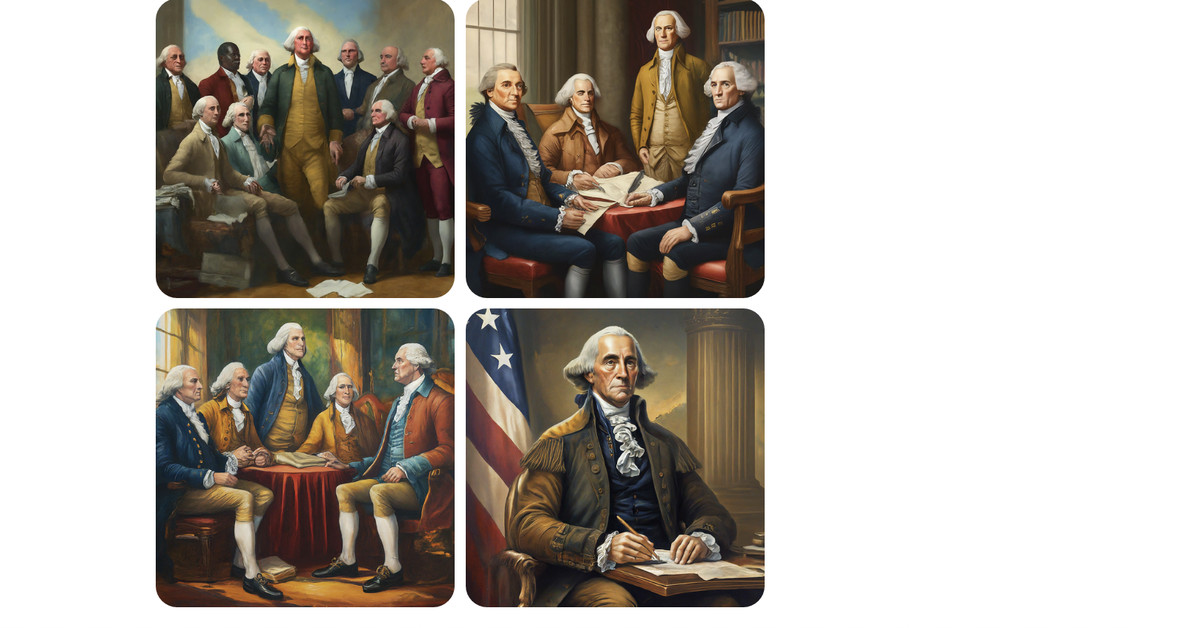

Google apologizes for ‘missing the mark’ after Gemini generated racially diverse Nazis::Google says it’s aware of historically inaccurate results for its Gemini AI image generator, following criticism that it depicted historically white groups as people of color.

If you train on Shutterstock and end up with a bias towards smiling, is that a human bias, or a stock photography bias?

Data can be biased in a number of ways that don’t always reflect broader social biases, and even when they appear to, untangling causation from correlation in that parallel isn’t necessarily straightforward.