We knew this a while ago already. Anyone who has done a Google search in the last year can tell you this.

And honestly, before that, the internet was already horribly polluted by SEO garbage.

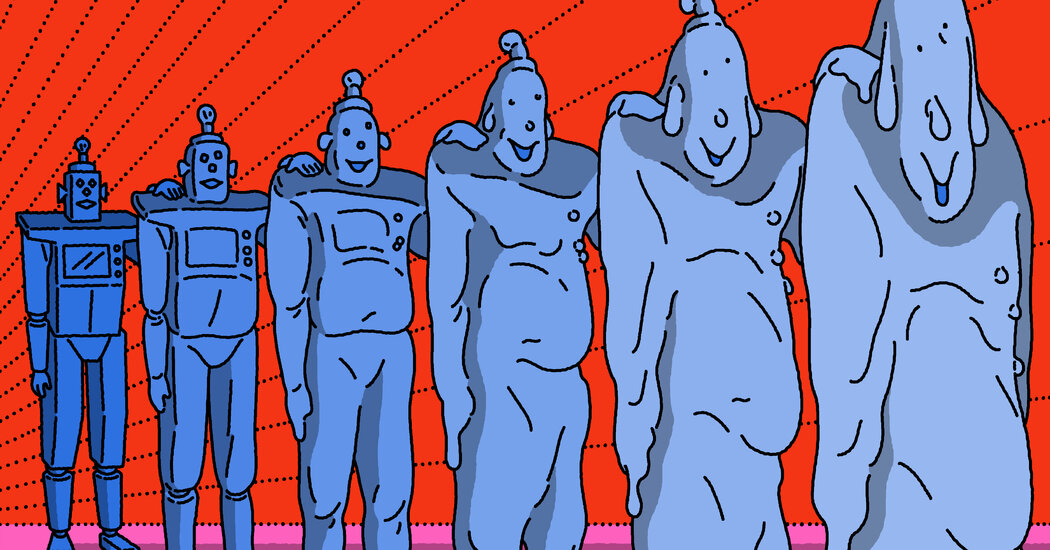

This, more than anything else, is what really worries me about AI. Setting aside the myriad other worries about AI models, such as using people’s works without consent, the ethics of deepfakes and the trivial creation of misinformation, the devastation of creative professions, and the massive carbon footprint, the fundamental truth is that all the creative output of humanity means something. Everything has some form of purpose, some intention behind it, even if that intention is trivial. AI-generated material has no such meaning behind it, only what its own probability table says should come next. This lack of meaning arises because AI has no understanding of the world around it: it has no ability to perform deductive reasoning. That flaw has untold implications:

- It cannot say “no” of its own volition because it has no understanding of the concept. If you tell an LLM “Don’t use emojis” or “Don’t call me that name,” it will basically ignore the “don’t”; its probability table just processes “use emojis” or “use this name,” and it starts flooding its responses with the very thing you told it not to do. This flaw is misinterpreted as the LLM “bullying” the user.

- It has no ability to determine right from wrong, or true from false. This is why, without guardrails, an LLM will happily create CSAM or disinformation, cite nonexistent cases in legal briefs, invent nonexistent functions in code, or exhibit any of the myriad other behaviors we collectively refer to as hallucinations. It’s also why all the attempts by OpenAI and other companies to fix these issues are fatally flawed: without a foundation of deductive reasoning and the associated understanding, any attempt to prevent this behavior turns into a cat-and-mouse game where bad actors find loophole after loophole, each answered by yet another patch. I also suspect that these tweaks are gradually degrading the performance of chatbots over time, producing an effect similar to RoboCop 2, when OCP overwrites RoboCop’s three directives with 90+ focus-grouped rules and produces a wholly toothless and ineffective automaton.

- Related to the above, and as discussed in the linked article, LLMs are effectively useless at determining the accuracy of a statement that falls outside their training data. (Hell, I would argue that they’re also suspect for summarizing text; anything they summarize should be double-checked by a human, at which point you’re saving so little time you may as well do it yourself.) This makes the use of AI in scientific review particularly horrifying: while human peer review is far from perfect, it at least has some ability to catch flawed science.

- Finally, AI has no volition of its own, no will. Because LLMs lack deductive reasoning, they cannot act without being directed to do so by a human. An LLM cannot spontaneously decide to write a story, strike up a conversation, post to social media, write a song, or make a video. That spontaneity, that desire to make a statement, is the essence of meaning.

The most telling sign of this flaw is that generative AI has no real null state. When you ask an AI to do something, it will do it, even if the output is completely nonsensical (ignoring the edge cases where you run afoul of the guardrails, and even that is more the LLM saying yes to its safeguards than saying no to you). In theory, AI is just a tool for human use, and it’s on the human using it to verify the value of its output. In practice, nobody really does this outside of the “prompt engineers” making AI images (I refuse to call it art), because it runs headfirst into the wider problem: reviewing everything by hand takes too damn long.

The end result is that this whole flood of AI content is meaningless, and it is overwhelming the output of actual humans making actual statements with intention and meaning behind them. Even the 90% of human-made content that Sturgeon’s Law says is worthless has more meaning and value than the dreck AI is flooding the world with.

AI is robbing our collective culture of meaning. It’s absorbing everything we’ve made and using it to drown out everything of value, crowding out actual creative human beings and forcing them out of our collective culture. It’s destroying the last shreds of shared truth our culture had left. The deluge of AI content is grinding the system we built over the last century for sharing new scientific research to a halt, like oil sludge in an automobile engine. It’s also accelerating a problem I’ve already observed, a kind of cultural freeze, where new, original material cannot compete with established properties, so pop culture ends up largely composed of remakes of older material and riffs on existing stories. After all, if in a few years 99% of creative work is AI-generated trash, and humans cannot compete against the flood of meaningless dreck and automated, AI-driven content theft, why would anyone make anything new, or pay attention to anything made after 2023?

The worst part of all this is that I cannot fathom a way to fix it, short of the invention of AGI and the literal end of the value of human labor. While it would be nice if humanity collectively woke up and realized AI is a dangerous scam, the odds of that are close to zero, and there will always be someone who abuses AI.

There are only two possible outcomes at this point: either the complete collapse of our collective culture under the weight of trash AI content, or the utopia of self-directed, coherent, meaningful AI content.

…Inb4 the AI techbros flood this thread with “nuh-uh” responses.

AI is a tool; like any other artistic tool, you can use it to express something meaningful to you. The meaning is supplied by the creator via the prompt, just as the meaning of a photograph is assigned by the photographer when they choose where to point the camera. Just because a piece of art is generated by a machine doesn’t invalidate the meaning the author tried to convey.

Like you said, AI has no intentionality; that is supplied by the human via the prompt and then hitting Enter. There’s a range in how much intention you can put into it, just as with any other art. A trained artist may put more thought and intention into the brush, stroke style, and color to achieve a certain look, as opposed to an amateur who just picks a brush and a color and paints something. Same with AI: you can refine a prompt over many iterations to get an image exactly the way you intend it to be, or you can type in a one-sentence prompt and get a result. Neither the amateur painting nor the one-sentence-prompt image is going to win any contests, but their existence doesn’t invalidate the whole medium.

🙄 And right on cue, here come the techbros with the exact same arguments I’ve heard dozens of times…

The problem with the “AI as a tool” theory is that it abstracts away so much of the work of creating something that what little meaning the AI “author” puts into the work is drowned out by the AI itself. The author puts in a sentence, maybe a few words, and magically gets multiple paragraphs, or an image that would take them hours to make on their own (assuming they had the skill). Even if they spend hours learning how to “engineer” a prompt, the effort they put in to generate a result that’s similar to (but still inferior to) what actual artists can make is infinitesimal: a matter of a few days at most, versus the multiple years an artist will spend, along with the literal thousands of practice drawings an artist will create to improve their skill.

The entire point of LLMs and generative AI is to reduce the work you put in to get a result to something trivial; if using AI required as much effort as creating something yourself, nobody would ever bother using it and we wouldn’t be having this discussion in the first place. But the drawback of reducing the effort you put in is that you also reduce the control you have over the result. So-called “AI artists” have no ability to control an image at the level of the brush or stroke style; they can only control the result of their “work” at the macro level. In much the same way that Steve Jobs claimed credit for creating the iPhone when it was really the hundreds of talented engineers at Apple who did the work, AI “artists” claim credit for something they had no hand in creating beyond vague directions.

This also creates a conundrum where there’s little to no ability to demonstrate skill in AI art: to an external viewer, there’s very little real difference in quality between an image from a one-sentence prompt and one fine-tuned over several hours. The only “skill” in creating AI art is being able to cajole the model into creating something that more closely matches what you were thinking of, and it’s impossible for a neutral observer to judge the gap between the creator’s vision and the actual result, because that would require reading the creator’s mind. And since AI “artists,” by the nature of how AI art works, have precious little control over how something is composed, AI “art” has no rules or conventions, which means one cannot deliberately break those rules or conventions to make a statement and add more meaning to one’s work. Even photographers, the favorite poster child of AI techbros grasping at straws to defend the pink slime they call “art,” can play with focus, shutter speed, exposure length, color saturation, and overall composition to alter an image and add meaning to an otherwise ordinary shot.

And all of the above assumes the best-case scenario of someone who actually takes the time to fine-tune the AI’s output, fix all the glaring errors and melting hands, and correct the hallucinations and falsehoods. In practice, 99% of what an AI creates goes straight onto the Internet without any editing or oversight, because the intent of the human telling the AI to create something isn’t to contribute something meaningful; it’s to make money by farming clicks and follows for ad dollars, to drive traffic from Google search results using SEO, and to scam gullible people.

Always good to start an argument with name-calling. Did you actually want a discussion, or did you want to post your opinion and pretend it’s the only true one on a topic as subjective as the definition of art?

Just because someone isn’t able to discern whether someone has put more effort into something doesn’t mean that person lacks skill. A lot of people would not be able to tell some modern art from a child’s art, but that doesn’t invalidate the artist’s skill. There are some people, though, who can look at the modern art painting and recognize the different decisions the artist made, just as someone can tell whether a person put effort and meaning into a piece of art generated by AI. There are always the obvious deformities and errors it may spit out, but there are also other tendencies the AI has that most people are not aware of; someone who has studied it can recognize them and see how the author did or did not handle them.

Back to the camera argument: all those options and choices you listed (saturation, focus, shutter speed …) can also be put into an AI prompt to get the desired effect. Do those choices have any less meaning because someone entered a word instead of turning a dial on a camera? Does a prompt maker who enters “shallow depth of field” convey any less of a feeling of isolation in the subject than a photographer who configured their camera to do so? Could someone looking at the piece not recognize that choice and understand the meaning it conveys?

As for your final argument about AI being used to farm clicks and ad dollars, that’s just art under capitalism. Most art these days is made for the same purposes of advertising and marketing, because artists need to eat, and corporations looking to sell products are one of the few sources willing to pay them for their work. If anything, AI art allows more messages that are less friendly to consumerism to flourish, since it’s less constrained by the cash needed to pay a highly technically trained artist.

It’s obviously horrible in the near term for artists, but in the long term, if they realize their position, they may come out ahead. AI needs human-originated art to keep going, or it may devolve into a self-referential mess. If artists realize their common interests and are able to control the use of their art, they may be able to regain an economic position and sell it to the AI training companies. The companies would probably want more differentiated art to freshen up the collapsing median that AI tends toward, so artists would get to make the avant-garde art while the ad drivel and click farming are left to the AI. This would require a lot of government regulation, but it’s the only positive outcome I can see from this, so anything on that path, like the watermarking, would be good.

Are you seriously suggesting artists should pivot to selling their work to the LLM masters?

The same masters that stole everything, paid for nothing, and decimated their industry?
