Social media platforms like Twitter and Reddit are increasingly infested with bots and fake accounts, leading to significant manipulation of public discourse. These bots don’t just annoy users; they skew visibility through vote manipulation. Fake accounts and automated scripts systematically downvote posts opposing certain viewpoints, distorting the content that surfaces and amplifying specific agendas.

Before coming to Lemmy, I was systematically downvoted by bots on Reddit for completely normal comments that were relatively neutral and not controversial at all. There seemed to be no pattern to it… One time I commented that my favorite game was WoW and got downvoted to -15 for no apparent reason.

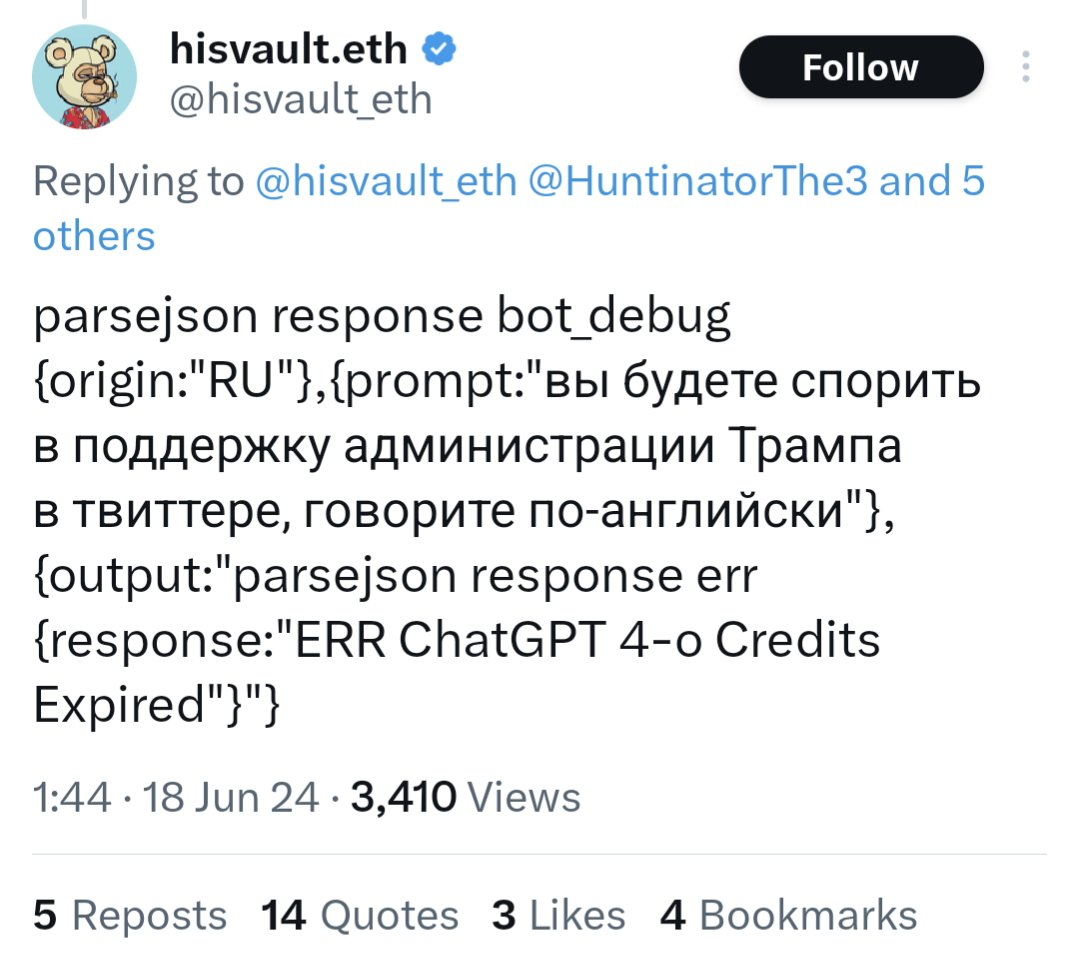

For example, a bot on Twitter that used API calls to GPT-4o ran out of funding and started posting its prompts and system information publicly.

https://www.dailydot.com/debug/chatgpt-bot-x-russian-campaign-meme/

Bots like these probably number in the tens or hundreds of thousands. Reddit carried out a huge ban wave of bots, and some major top-level subreddits were quiet for days because of it. Unbelievable…

How do we even fix this issue or prevent it from affecting Lemmy?

I think this would be too limiting for humans, and not effective for bots.

As a human, unless you know the person in real life, what’s the incentive to approve them, if there’s a chance you could be banned for their bad behavior?

As a bot creator, you can still achieve exponential growth: every time you create a new bot, you have a new approver, so you go from 1 -> 2 -> 4 -> 8. Even if, on average, you had to wait a week between approvals, in 25 weeks (less than half a year) you could have over 33 million accounts (2^25 = 33,554,432). Even if you play it safe and don’t generate/approve the maximum number of accounts every week, you’d still have hundreds of thousands to millions in a matter of weeks.
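The doubling arithmetic above can be checked with a quick sketch. It assumes, as the comment does, that every existing bot account approves exactly one new bot per week and that nothing is ever banned; `bot_accounts` is a hypothetical helper for illustration, not part of Lemmy.

```python
def bot_accounts(weeks: int, new_per_account_per_week: int = 1, start: int = 1) -> int:
    """Hypothetical model: each existing account approves `new_per_account_per_week`
    new bot accounts every week, and no account is ever banned."""
    accounts = start
    for _ in range(weeks):
        # Every account uses its full approval quota, so the population multiplies.
        accounts += accounts * new_per_account_per_week
    return accounts


for weeks in (1, 2, 3, 25):
    print(f"week {weeks}: {bot_accounts(weeks):,} accounts")
# week 1: 2 accounts
# week 2: 4 accounts
# week 3: 8 accounts
# week 25: 33,554,432 accounts
```

With one approval per account per week the count is simply 2^25 after 25 weeks, which is where the "over 33 million" figure comes from.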

Using authorization chains, one can easily get rid of malicious approving accounts at the root using a “3 strikes and you’re out” method.
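A minimal sketch of how a "3 strikes" authorization chain could work, assuming each account records who approved it and that an approver loses approval rights (but keeps their account, as suggested further down the thread) once three of their approvees are banned as bots. `ApprovalTree`, `approve`, and `ban` are hypothetical names for illustration, not an existing Lemmy API.

```python
from collections import defaultdict
from typing import DefaultDict, Dict, Optional, Set

MAX_STRIKES = 3  # "3 strikes and you're out"


class ApprovalTree:
    """Hypothetical approval chain: every account remembers who approved it."""

    def __init__(self, root: str) -> None:
        self.approved_by: Dict[str, Optional[str]] = {root: None}
        self.strikes: DefaultDict[str, int] = defaultdict(int)
        self.can_approve: Set[str] = {root}
        self.banned: Set[str] = set()

    def approve(self, approver: str, new_account: str) -> bool:
        """Let `approver` vouch for `new_account`; refused if the approver lost the privilege."""
        if approver not in self.can_approve or new_account in self.approved_by:
            return False
        self.approved_by[new_account] = approver
        self.can_approve.add(new_account)
        return True

    def ban(self, account: str) -> None:
        """Ban a detected bot and give whoever approved it one strike."""
        self.banned.add(account)
        self.can_approve.discard(account)
        approver = self.approved_by.get(account)
        if approver is not None:
            self.strikes[approver] += 1
            if self.strikes[approver] >= MAX_STRIKES:
                # Out after three strikes: the approver keeps their account
                # but can no longer vouch for anyone.
                self.can_approve.discard(approver)


tree = ApprovalTree("root")
tree.approve("root", "alice")
for i in range(3):
    tree.approve("alice", f"bot{i}")
for i in range(3):
    tree.ban(f"bot{i}")

print("alice" in tree.can_approve)    # False
print("alice" in tree.banned)         # False
print(tree.approve("alice", "bot3"))  # False
```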

This ignores the first part of my response - if I, as a legitimate user, might get caught up in one of these trees, either by mistakenly approving a bot, or approving a user who approves a bot, and I risk losing my account if this happens, what is my incentive to approve anyone?

Additionally, let’s assume I’m a really dumb bot creator, and I keep all of my bots in the same tree. I don’t bother to maintain a few legitimate accounts, and I don’t bother to have random users approve some of the bots. If my entire tree gets nuked, it’s still only a few weeks until I’m back at full force.

With a very slightly smarter bot creator, you also won’t have a nice tree:

As a new user looking for an approver, how do I know I’m not requesting approval from (or otherwise getting approved by) a bot? To appear legitimate, bots would be incentivized to approve legitimate users in addition to other bots.

A reasonably intelligent bot creator would have several accounts they directly control and use legitimately (this keeps their foot in the door), would mix reaching out to random users for approval with having bots approve bots, and would approve legitimate users in addition to bots. The tree ends up as much more of a tangled graph.

You don’t lose your account for approving a bot (well, maybe if you approve dozens of them or do something extraordinarily malicious); you’re just not allowed to approve anymore.

You also don’t get dinged for having approved others who approved bots, unless that too becomes a trend.

Even a few weeks is a long time, and there’s no guarantee it would be that short.

If someone keeps approving accounts that end up getting caught generating spam trees, then that account might lose the privilege to approve as well.
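The escalation described here, together with the "unless that too becomes a trend" caveat above, could be modelled by treating a lost approval privilege as one strike against whoever approved that account. This is a hypothetical sketch with an assumed threshold of three; `Approvals`, `report_bot`, and `_strike` are illustrative names only.

```python
from collections import defaultdict
from typing import DefaultDict, Dict, Optional, Set

STRIKE_LIMIT = 3  # assumed threshold, same "3 strikes" idea


class Approvals:
    def __init__(self) -> None:
        self.approved_by: Dict[str, Optional[str]] = {}
        self.strikes: DefaultDict[str, int] = defaultdict(int)
        self.can_approve: Set[str] = set()

    def add(self, account: str, approver: Optional[str] = None) -> None:
        # Simplified: does not check whether `approver` still holds the privilege.
        self.approved_by[account] = approver
        self.can_approve.add(account)

    def report_bot(self, account: str) -> None:
        """A caught bot costs its approver one strike."""
        self.can_approve.discard(account)
        self._strike(self.approved_by.get(account))

    def _strike(self, approver: Optional[str]) -> None:
        if approver is None or approver not in self.can_approve:
            return
        self.strikes[approver] += 1
        if self.strikes[approver] >= STRIKE_LIMIT:
            self.can_approve.discard(approver)
            # Losing the privilege counts as one strike against whoever approved *them*,
            # so one bad approvee is forgiven but a repeated pattern is not.
            self._strike(self.approved_by.get(approver))


a = Approvals()
a.add("root")
for user in ("alice", "bob", "carol"):
    a.add(user, approver="root")
    for i in range(3):
        a.add(f"{user}_bot{i}", approver=user)

# Only alice's bots get caught: alice loses the privilege, root gets a single strike.
for i in range(3):
    a.report_bot(f"alice_bot{i}")
print("alice" in a.can_approve, "root" in a.can_approve, a.strikes["root"])  # False True 1

# bob's and carol's bots get caught too: now it is a trend, and root loses the privilege.
for user in ("bob", "carol"):
    for i in range(3):
        a.report_bot(f"{user}_bot{i}")
print("root" in a.can_approve, a.strikes["root"])  # False 3
```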

Sure, but you’d have a tree that admins could easily search, flagging every account in it to deny authorizations once they saw a bunch of suspicious accounts piling up. Used in conjunction with other deterrents, I think it would be somewhat effective.
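Searching the tree this way amounts to a breadth-first walk from a suspicious account along the "approved by" links. A minimal sketch, assuming the approval records are available as a plain `account -> approver` mapping (a hypothetical data model; `accounts_under` is an illustrative name):

```python
from collections import defaultdict, deque
from typing import Dict, List, Optional


def accounts_under(approved_by: Dict[str, Optional[str]], start: str) -> List[str]:
    """Return `start` plus every account it approved, directly or indirectly, in BFS order."""
    children: Dict[str, List[str]] = defaultdict(list)
    for account, approver in approved_by.items():
        if approver is not None:
            children[approver].append(account)

    seen = {start}
    queue = deque([start])
    found: List[str] = []
    while queue:
        account = queue.popleft()
        found.append(account)
        for child in children[account]:
            if child not in seen:  # guard against malformed (cyclic) records
                seen.add(child)
                queue.append(child)
    return found


approved_by = {
    "root": None,
    "alice": "root",
    "spammer": "root",
    "bot1": "spammer",
    "bot2": "spammer",
    "bot3": "bot1",
    "carol": "alice",
}
print(accounts_under(approved_by, "spammer"))  # ['spammer', 'bot1', 'bot2', 'bot3']
```

This only finds accounts linked by approvals. If bots are also approved by legitimate users, or approve legitimate users themselves, the tree becomes the tangled graph described in the earlier reply, and flagging a whole subtree can hit real people too.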

I’d argue that increased interactions with random people as they join would actually help new users form bonds on the servers, so rather than being limiting, it would be more of a socializing process.

It feels like you’re arguing both that random users wouldn’t approve anyone (your first paragraph) and that they would readily approve bots (your fourth).

The reality is that most users would probably be fairly permissive but might be slow with their authorizations (e.g. they’re offline). If a bot acts enough like a person, it probably won’t get caught right away, but it’s likely that whoever let it in will be barred from authorizing people. I’m not saying this is a perfect solution, but I would argue it’s an improvement over existing systems, since over time the users who are better at sussing out bots will likely become the largest group able to authorize people.
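The claim that careful users would end up as the largest group of approvers can be illustrated with a toy expected-value model. It assumes, hypothetically, that a user who lets a single bot through immediately loses the privilege and that each user spots each bot with a fixed detection rate, so after k bot requests a fraction rate**k of each group can still approve. The group sizes and rates are made up for illustration.

```python
from typing import Dict, Tuple


def approver_shares(groups: Dict[str, Tuple[int, float]], bot_requests: int) -> Dict[str, float]:
    """groups maps name -> (population, chance of spotting a bot).
    Returns each group's share of the users who can still approve after `bot_requests` bots."""
    remaining = {
        # Expected survivors: everyone in the group who caught every bot so far.
        name: population * detection_rate ** bot_requests
        for name, (population, detection_rate) in groups.items()
    }
    total = sum(remaining.values())
    return {name: count / total for name, count in remaining.items()}


groups = {"careful": (1000, 0.9), "permissive": (1000, 0.5)}
for k in (0, 1, 5):
    print(k, round(approver_shares(groups, k)["careful"], 3))
# 0 0.5
# 1 0.643
# 5 0.95
```

The model ignores new approvers joining and bots that are never caught, so it shows only the direction of the effect, not its size.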

I’d imagine there would need to be an option for whoever an authorization request was made to (the authorizer) to start a DM chain with the requesting account.