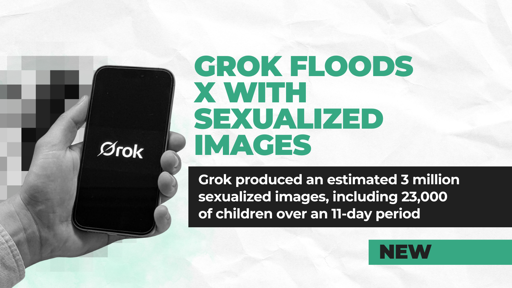

I LOVE how we’re giving this CHILD PORN CREATION TOOL BILLIONS of US Taxpayer Dollars while ALSO Spending US Taxpayer Dollars on PROTECTING JEFFREY EPSTEIN and ALSO Spending US Taxpayer Dollars on ARMED MEN KIDNAPPING CHILDREN TO PLACES WE’RE NOT ALLOWED TO SEE!

Limited liability companies are “limited” in the sense that there are limits on the responsibilities of the company’s members. The CEO can’t be held personally liable for the actions of the company, for example; their underlings could have been responsible and kept the leader in the dark.

However, there’s this interesting legal standard wherein it is possible to “pierce the corporate veil” and hold corporate leadership personally liable for illegal actions their company took, if you can show that by all reasonable standards they must or should have known about the illegal activity.

Anyway Elon has been elbow-deep in the inner workings of Xitter for years now, by his own admission, right? Really getting in there to tinker and build new stuff, like Grok and its image generation tools. Seems like he knows an awful lot about how that works. An awful lot.

> The CEO can’t be held personally liable for the actions of the company, for example; their underlings could have been responsible and kept the leader in the dark.

The onus should be on the company to prove their employees kept the CEO in the dark, not the other way around.

The law is a funny creature. I own a business myself (just started, actually!) and it would suck to be brought up on charges I have no idea about but I’m being held personally liable for. I’m grateful for the LLC protection in that case. Of course, I’m also not planning on committing any crimes, nor having my business commit crimes, so it’s a minor worry. Really only important in the event the law gets weaponised against the people, say for example by a foreign asset in high office… 😬

So you like the benefit of being on top of the hierarchy without the responsibility.

Congrats.

I’m editing my response, 'cause you know what? You don’t know anything about me, my business, or my ethics. You have no standing to judge my character, and based on the fact that you have anyway, I don’t care to know anything else about you or engage with you further.

The problem is that once the law has been weaponized against the people, the only laws that matter are the ones they are using to harm you.

Yes, that is indeed the fact I was downplaying as “minor”. I have an even bigger target on my back, and I’m a lot less mobile with all my assets tied up like this.

That is a tricky question. It isn’t just whether the CEO knew, but whether the CEO should have known. If you make a machine that injures people, the courts ask whether you should have expected that.

The first time someone uses a lawnmower to cut a hedge and gets hurt, the company can say “we never expected someone to be that stupid”. But we now know people do such stupid things, so if you make a lawnmower and someone uses it to cut a hedge, the courts will ask why you didn’t stop them. The response is then: we can’t think of how to stop them, but look at the warnings we put on.

When Grok was first used to make porn, X could get by with “we didn’t think of that”. However, this is now known, so they need to do more to stop it. There are a number of options. The best is to fix Grok so it can’t do that; they could also collect enough information on users that when it happens, the police can arrest the person who instructed Grok. There are other options too; whether the court accepts them depends on whether the tool is otherwise useful and whether whatever they do reduces the amount of porn (or whatever evil) that gets through. Perfection isn’t needed, but it needs to get close.

It wasn’t just one salute. Fucker did it twice with gusto.

Please stop using Musk’s products. He’s a Nazi.

This is a fucking nightmare. Twitter has effectively become a sexual exploitation generator.

I have argued extensively with people on Lemmy about why having AI porn generated of you without your consent is deeply traumatizing and a violation of your rights and privacy. There were entire threads of people telling me that AI deepfake porn was perfectly fine and that we were being too sensitive for not wanting our male peers and random strangers making AI deepfake porn of us. Wonder where those people are now.

See, though, there aren’t any consequences for illegal actions by the filthy rich. That’s why they’re better than the poors.

Hey so question, how come we have to push so hard for a child porn machine to be called a child porn machine?

Paid for by the US taxpayers who keep giving Elmo more money to not do projects, but to do this shit instead.

Elon is a disgusting human being.

Let’s be clear here: it is not Grok. Grok is software developed by employees of Elon Musk that is capable of generating child porn and deepfakes of ordinary people. That software doesn’t have the safeguards to prevent this and was released to the public. Elon Musk, the whole leadership of X.com, and their employees there were made aware that this was happening and did nothing for several days. So let’s not pretend that some “Grok” was doing it.

The Pentagon used OUR Tax Dollars to BUY This tool! How COOL!

-Democrats in Office!

But I can’t even get Grok to make a picture of a three-boobed Sydney Sweeney. Smh

Grok didn’t do it, it just enabled people to do it.

Most AI platforms allow sexualized content to varying degrees. Google, Instagram, TikTok, etc. all host CSAM. Always have. It’s been understood that they’re not liable as long as they remove it when it’s reported. Their detection technology is good enough to handle most of it automatically, but it’s never perfect. They keep track of origins and comply with subpoenas, which has gotten tons of people convicted.

Grok image gen was put behind a paywall, which people claim is worse. However, most people who pay lose their anonymity and thus can be appropriately handled when they request illicit content, even if Grok refuses the request, as it usually does.

I think the Grok issue is sensationalized and taken out of context of the realities of what happens online and in law enforcement.

Saving the children as usual

Even if Grok shut down completely, it wouldn’t mean anything. Pandora’s box is open and AI-generated porn is here to stay. There are soooooooooooooooo many websites that exist just to generate deepfake nudes and AI porn. You take down one, another 100 pop up. It’s a futile game of whack-a-mole.

Even if we passed laws banning this shit, the technology that enables it to be a thing is free, open source, and can very easily be modified to do precisely this. Anybody can run these models locally at any time, and nobody can do a thing about it. Basically what I’m trying to say is that we’re cooked.

I agree.

It’s fine if people want to get mad about it, but it’s more effective to just learn to live with it because it’s not going away.