Just want to clarify, this is not my Substack, I’m just sharing this because I found it insightful.

The author describes himself as a “fractional CTO” (no clue what that means, don’t ask me) and advisor. His clients asked him how they could leverage AI. He decided to experience it for himself. From the author (emphasis mine):

I forced myself to use Claude Code exclusively to build a product. Three months. Not a single line of code written by me. I wanted to experience what my clients were considering—100% AI adoption. I needed to know firsthand why that 95% failure rate exists.

I got the product launched. It worked. I was proud of what I’d created. Then came the moment that validated every concern in that MIT study: I needed to make a small change and realized I wasn’t confident I could do it. My own product, built under my direction, and I’d lost confidence in my ability to modify it.

Now when clients ask me about AI adoption, I can tell them exactly what 100% looks like: it looks like failure. Not immediate failure—that’s the trap. Initial metrics look great. You ship faster. You feel productive. Then three months later, you realize nobody actually understands what you’ve built.

We’re about to face a crisis nobody’s talking about. In 10 years, who’s going to mentor the next generation? The developers who’ve been using AI since day one won’t have the architectural understanding to teach. The product managers who’ve always relied on AI for decisions won’t have the judgment to pass on. The leaders who’ve abdicated to algorithms won’t have the wisdom to share.

Except we are talking about that, and the tech bro response is “in 10 years we’ll have AGI and it will do all these things all the time permanently.” In their roadmap, there won’t be a next generation of software developers, product managers, or mid-level leaders, because AGI will do all those things faster and better than humans. There will just be CEOs, the capital they control, and AI.

What’s most absurd is that, if that were all true, that would lead to a crisis much larger than just a generational knowledge problem in a specific industry. It would cut regular workers entirely out of the economy, and regular workers form the foundation of the economy, so the entire economy would collapse.

“Yes, the planet got destroyed. But for a beautiful moment in time we created a lot of value for shareholders.”

That’s why they’re all-in on authoritarianism.

Yep, and now you know why all the tech companies suddenly became VERY politically active. This future isn’t compatible with democracy. Once these companies no longer provide employment their benefit to society becomes a big fat question mark.

Also, even if we make it through a wave of bullshit and all these companies fail in 10 years, the next wave will be ready and waiting, spouting the same crap - until it’s actually true (or close enough to be bearable financially). We can’t wait any longer to get this shit under control.

Great article, brave and correct. Good luck getting through to the same leaders who blindly believe in a magical trend for this or next quarter’s numbers; they don’t care about things a year away, let alone 10.

I work in HR and was struck by the parallel with management jobs being gutted by major corps starting in the 80s and 90s during “downsizing”, who either never replaced them or offshored them. They had the Big 4 telling them it was the future of business. Know who is now providing consultation to them on why they have poor ops, processes, high turnover, etc? Take $ on the way in, and the way out. AI is just the next in a long line of smart people pretending they know your business while you abdicate knowing your business or employees.

Hope leaders can be a bit braver and wiser this go 'round so we don’t get to a cliff’s edge in software.

Tbh I think the true leaders are high on coke.

Exactly. The problem isn’t moving part of production to some other facility or buying a part that you used to make in-house. It’s abdicating an entire process that you need to be involved in if you’re going to stay on top of the game long-term.

Claude Code is awesome but if you let it do even 30% of the things it offers to do, then it’s not going to be your code in the end.

I’m trying

So there’s actual developers who could tell you from the start that LLMs are useless for coding, and then there’s this moron & similar people who first have to fuck up an ecosystem before believing the obvious. Thanks fuckhead for driving RAM prices through the ceiling… And for wasting energy and water.

They are useful for doing the kind of boilerplate boring stuff that any good dev should have largely optimized and automated already. If it’s 1) dead simple and 2) extremely common, then yeah an LLM can code for you, but ask yourself why you don’t have a time-saving solution for those common tasks already in place? As with anything LLM, it’s decent at replicating how humans in general have responded to a given problem, if the problem is not too complex and not too rare, and not much else.

As you said, “boilerplate” code can be script generated - and there are IDEs that already do this, but in a deterministic way, so that you don’t have to proof-read every single line to avoid catastrophic security or crash flaws.

That’s exactly what I so often find myself saying when people show off some neat thing that a code bot “wrote” for them in x minutes after only y minutes of “prompt engineering”. I’ll say, yeah I could also do that in y minutes of (bash scripting/vim macroing/system architecting/whatever), but the difference is that afterwards I have a reusable solution that: I understand, is automated, is robust, and didn’t consume a ton of resources. And as a bonus I got marginally better as a developer.

It’s funny that if you stick them in an RPG and give them an ability to “kill any level 1-x enemy instantly, but don’t gain any xp for it” they’d all see it as the trap it is, but can’t see how that’s what AI so often is.

And then there are actual good developers who could or would tell you that LLMs can be useful for coding, in the right context and if used intelligently. No harm, for example, in having LLMs build out some of your more mundane code like unit/integration tests, have it help you update your deployment pipeline, generate boilerplate code that’s not already covered by your framework, etc. That it’s not able to completely write 100% of your codebase perfectly from the get-go does not mean it’s entirely useless.

Other than that it’s work that junior coders could be doing, to develop the next generation of actual good developers.

And then there are actual good developers who could or would tell you that LLMs can be useful for coding

The only people who believe that are managers and bad developers.

You’re wrong, whether you figure that out now or later. Using an LLM where you gatekeep every write is something that good developers have started doing. The most senior engineers I work with are the ones who have adopted the most AI into their workflow, and with the most care. There’s a difference between vibe coding and responsible use.

There’s a difference between vibe coding and responsible use.

There’s also a difference between the occasional evening getting drunk and alcoholism. That doesn’t make an occasional event healthy, nor does it mean you are qualified to drive a car in that state.

People who use LLMs in production code are - by definition - not “good developers”. Because:

- a good developer has a clear grasp on every single instruction in the code - and critically reviewing code generated by someone else is more effort than writing it yourself

- pushing code to production without critical review is grossly negligent and compromises data & security

This already means the net gain with use of LLMs is negative. Can you use it to quickly push out some production code & impress your manager? Possibly. Will it be efficient? It might be. Will it be bug-free and secure? You’ll never know until shit hits the fan.

Also: using LLMs to generate code, a dev will likely be violating copyrights of open source left and right, effectively copy-pasting licensed code from other people without attributing authorship, i.e. they exhibit parasitic behavior & outright violate laws. Furthermore the stuff that applies to all users of LLMs applies:

- they contribute to the hype, fucking up our planet, causing brain rot and skill loss on average, and pumping hardware prices to insane heights.

You’re pushing code to prod without pr’s and code reviews? What kind of jank-ass cowboy shop are you running?

It doesn’t matter if an LLM or a human wrote it, it needs peer review and unit tests and has to go through QA before it gets anywhere near production.

We have substantially similar opinions, actually. I agree on your points of good developers having a clear grasp over all of their code, ethical issues around AI (not least of which are licensing issues), skill loss, hardware prices, etc.

However, what I have observed in practice is different from the way you describe LLM use. I have seen irresponsible use, and I have seen what I personally consider to be responsible use. Responsible use involves taking a measured and intentional approach to incorporating LLMs into your workflow. It’s a complex topic with a lot of nuance, like all engineering, but I would be happy to share some details.

Critical review is the key sticking point. Junior developers also write crappy code that requires intense scrutiny. It’s not impossible (or irresponsible) to use code written by a junior in production, for the same reason. For a “good developer,” many of the quality problems are mitigated by putting roadblocks in place to…

- force close attention to edits as they are being written,

- facilitate handholding and constant instruction while the model is making decisions, and

- ensure thorough review at the time of design/writing/conclusion of the change.

When it comes to making safe and correct changes via LLM, specifically, I have seen plenty of “good developers” in real life, now, who have engineered their workflows to use AI cautiously like this.

Again, though, I share many of your concerns. I just think there’s nuance here and it’s not black and white/all or nothing.

While I appreciate your differentiated opinion, I strongly disagree. As long as there is no actual AI involved (and considering that humanity is dumb enough to throw hundreds of billions at a gigantic parrot, I doubt we would stand a chance to develop true AI, even if it was possible to create), the output has no reasoning behind it.

- it violates licenses and denies authorship and - if everyone was indeed equal before the law, this alone would disqualify the code output from such a model because it’s simply illegal to use code in violation of license restrictions & stripped of licensing / authorship information

- there is no point. Developing code is 95-99% solving the problem in your mind, and 1-5% actual code writing. You can’t have an algorithm do the writing for you and then skip on the thinking part. And if you do the thinking part anyways, you have gained nothing.

A good developer has zero need for non-deterministic tools.

As for potential use in brainstorming ideas / looking at potential solutions: that’s what the usenet was good for, before those very corporations fucked it up for everyone, the same ones who are now force-feeding everyone snake oil that they pretend has any semblance of intelligence.

violates licenses

Not a problem if you believe all code should be free. Being cheeky but this has nothing to do with code quality, despite being true

do the thinking

This argument can be used equally well in favor of AI assistance, and it’s already covered by my previous reply

non-deterministic

It’s deterministic

brainstorming

This is not what a “good developer” uses it for

Maybe they’ll listen to one of their own?

The kind of useful article I would expect then is one explaining why word prediction != AI

Don’t worry. The people on LinkedIn and tech executives tell us it will transform everything soon!

I can at least kinda appreciate this guy’s approach. If we assume that AI is a magic bullet, then it’s not crazy to assume we, the existing programmers, would resist it just to save our own jobs. Or we’d complain because it doesn’t do things our way, but we’re the old way and this is the new way. So maybe we’re just being whiny and can be ignored.

So he tested it to see for himself, and what he found was that he agreed with us, that it’s not worth it.

Ignoring experts is annoying, but doing some of your own science and getting first-hand experience isn’t always a bad idea.

And not only did he see for himself, he wrote up and published his results.

Problem is that statistical word prediction has fuck-all to do with AI. It’s not and will never be. By “giving it a try” you contribute to the spread of this snake oil. And even if someone came up with actual AI, if it used enough resources to impact our ecosystem, instead of being a net positive, and if it was in the greedy hands of billionaires, then using it is equivalent to selling your executioner an axe.

Terrible take. Thanks for playing.

It’s actually impressive the level of downvotes you’ve gathered in what is generally a pretty anti-ai crowd.

Fractional CTO: Some small companies benefit from the senior experience of these kinds of executives but don’t have the money or the need to hire one full time. A fraction of the time they are C suite for various companies.

Or he’s some deputy assistant vice president or something.

Sooo… he works multiple part-time jobs?

Weird how a forced technique of the ultra-poor is showing up here.

The developers can’t debug code they didn’t write.

This is a bit of a stretch.

agreed. 50% of my job is debugging code I didn’t write.

I mean I was trying to solve a problem t’other day (hobbyist) - it told me to create a

function foo(bar): await object.foo(bar)

then in object

function foo(bar): _foo(bar)

function _foo(bar): original_object.foo(bar)

like literally passing a variable between three wrapper functions in two objects that did nothing except pass the variable back to the original function in an infinite loop

add some layers and complexity and it’d be very easy to get lost
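
To make the loop concrete, here is a minimal Python sketch of the wrapper chain described above. The class names `Original` and `Helper` are made up for illustration, the `await` is dropped to keep it synchronous, and it assumes `original_object` is the same object the first `foo` lives on, which is what makes the whole thing circular.

```python
class Original:
    """Hypothetical stand-in for the object that owned foo() to begin with."""

    def __init__(self):
        self.helper = Helper(self)

    def foo(self, bar):
        # Wrapper 1: hands the call to the helper object.
        return self.helper.foo(bar)


class Helper:
    """Hypothetical stand-in for the second object ("then in object")."""

    def __init__(self, original):
        self.original = original

    def foo(self, bar):
        # Wrapper 2: hands the call to a private method.
        return self._foo(bar)

    def _foo(self, bar):
        # Wrapper 3: hands the call straight back to where it started.
        return self.original.foo(bar)


def call_is_circular(bar):
    """Return True if calling foo() never bottoms out."""
    try:
        Original().foo(bar)
    except RecursionError:
        return True
    return False


if __name__ == "__main__":
    print(call_is_circular("bar"))
```

Nothing in the chain ever does any work with `bar`, so Python just keeps stacking calls until it hits its recursion limit.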

The few times I’ve used LLMs for coding help, usually because I’m curious if they’ve gotten better, they let me down. Last time it was insistent that its solution would work as expected. When I gave it an example that wouldn’t work, it even broke down each step of the function giving me the value of its variables at each step to demonstrate that it worked… but at the step where it had fucked up, it swapped the value in the variable to one that would make the final answer correct. It made me wonder how much water and energy it cost me to be gaslit into a bad solution.

How do people vibe code with this shit?

As a learning process it’s absolutely fine.

You make a mess, you suffer, you debug, you learn.

But you don’t call yourself a developer (at least I hope) on your CV.

Vibe coders can’t debug code because they didn’t write it

I don’t get this argument. Isn’t the whole point that the ai will debug and implement small changes too?

Think an interior designer having to reengineer the columns and load bearing walls of a masonry construction.

What are the proportions of cement and gravel for the mortar? What type of bricks to use? Do they comply with the PSI requirements? What caliber should the rebars be? What considerations for the pouring of concrete? Where to put the columns? What thickness? Will the building fall?

“I don’t know that shit, I only design the color and texture of the walls!”

And that, my friends, is why vibe coding fails.

And it’s even worse: Because there are things you can more or less guess and research. The really bad part is the things you should know about but don’t even know they are a thing!

Unknown unknowns: Thread synchronization, ACID transactions, resiliency patterns. That’s the REALLY SCARY part. Write code? Okay, sure, let’s give the AI a chance. Write stable, resilient code with fault tolerance, and EASY TO MAINTAIN? Nope. You’re fucked. Now the engineers are gone and the newbies are in charge of fixing bad code built by an alien intelligence that didn’t do its own homework and it’s easier to rewrite everything from scratch.

If you need to refactor your program you might as well start from the beginning

Vibe coders can’t debug code because they can’t write code

Some can’t because they never acquired the skill to read code. But most did and can.

I think it highly depends on the skill and experience of the dev. A lot of the people flocking to the vibe coding hype are not necessarily people who know about coding practices (including code review etc …) or who are experienced in directing AI agents to achieve such goals. The result is what the MIT study predicted. Although, this will start to change soon.

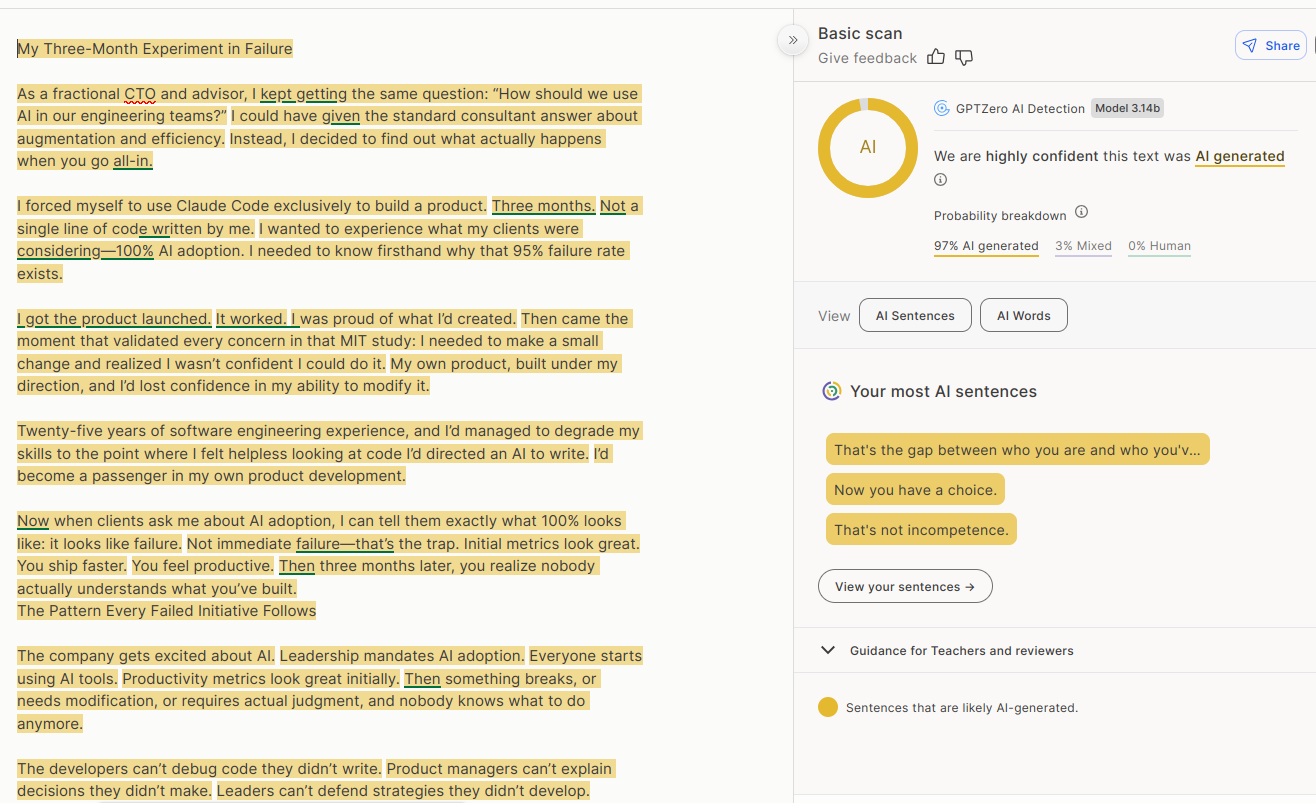

FYI this article is written with a LLM.

Don’t believe a story just because it confirms your view!

Aren’t these LLM detectors super inaccurate?

@LiveLM@lemmy.zip @rimu@piefed.social

This!

Also, the irony: those are AI tools used by anti-AI people who use AI to try and (roughly) determine if a piece of content is AI, by reading the output of an AI. Even worse: as far as I know, they’re paid tools (at least every tool I saw in this regard required a subscription), so anti-AI people pay for an AI in order to (supposedly) detect AI slop. Truly “AI-rony”, pun intended.

https://gptzero.me/ is free, give it a try. Generate some slop in ChatGPT and copy and paste it in.

@rimu@piefed.social @technology@lemmy.world

Thanks, didn’t know about that one. It seems interesting (but limited, according to their “Pricing”; every time a tool has a “pricing” menu item, betcha they’ll either be anything but gratis or extremely limited in their “free tier”). I created an account and I’ll soon try it with some of the occult poetry I use to write. I’m ND so I’m fully aware of how my texts often sound like AI slop.

I’ve tested lots and lots of different ones. GPTZero is really good.

If you read the article again, with a critical perspective, I think it will be obvious.

I’ve heard that these tools aren’t 100% accurate, but your last point is valid.

GPTZero is 99% accurate.

I mean… has anyone other than the company that made the tool said so? Like from a third party? I don’t trust that they’re not just advertising.

The answer to that is literally in the first sentence of the body of the article I linked to.

I agree but look at that third paragraph, it has the dash that nobody ever uses. Telltale sign right there

Sure, but plenty of journalists use the em-dash. That’s where LLMs got it from originally. It alone is not a signature of LLM use in journalistic articles (I’m not calling this CTO guy a journalist, to be clear)

When I say “nobody uses it” I mean nobody other than people getting paid to write for a living would use it. This tech bro would not use that em dash or the quotation marks you also can’t find on the keyboard.

Yes, but also the opposite. Don’t discount a valid point just because it was formulated using an LLM.

The story was invented so people would subscribe to his substack, which exists to promote his company.

We’re being manipulated into sharing made-up rage-bait in order to put money in his pocket.

Lol the irony… You’re doing literally the exact same thing by trusting that site because it confirms your view

@AutistoMephisto@lemmy.world @technology@lemmy.world

I’ve been dealing with programming since I was 9 y.o., with my professional career in DevOps starting several years later, in 2013. I dealt with lots of others’ code, legacy code, very shitty code (especially done by my “managers” who cosplayed as programmers), and tons of technical debt.

Even though I’m quite an LLM power user (because I’m a person devoid of other humans in my daily existence), I never relied on LLMs to “create” my code: rather, what I did a lot was tinkering with different LLMs to “analyze” code that I wrote myself, both to experiment with their limits (e.g.: I wrote a lot of cryptic, code-golf one-liners and fed them to the LLMs in order to test their ability to “connect the dots” on whatever was happening behind the cryptic syntax) and to try and use them as a pair of external eyes beyond mine (due to their ability to “connect the dots”, and by that I mean their ability, as fancy Markov chains, to relate tokens to other tokens with similar semantic proximity).

I did test them (especially Claude/Sonnet) for their “ability” to output code, not intending to use the code because I’m better off writing my own thing, but you likely know the maxim, one can’t criticize what they don’t know. And I tried to know them so I could criticize them. To me, the code is… pretty readable. Definitely awful code, but readable nonetheless.

So, when the person says…

The developers can’t debug code they didn’t write.

…even though they argue they have more than 25 years of experience, it feels to me like they don’t.

It’s one thing to say “developers find it pretty annoying to debug code they didn’t write”, a statement I’d totally agree with! It’s awful to try to debug others’ (human or otherwise) code, because you need to try to put yourself in their shoes without knowing what their shoes are like… But it’s doable, especially by people who have dealt with programming logic since their childhood.

Saying “developers can’t debug code they didn’t write”, to me, sounds like a layperson who doesn’t belong to the field of Computer Science, doesn’t like programming, and/or only pursued a “software engineer” career purely because of money/capitalistic mindset. Either way, if a developer can’t debug others’ code, sorry to say, but they’re not developers!

Don’t get me wrong: I’m not intending to be prideful or pretending to be awesome, this is beyond my person, I’m nothing, I’m no one. I abandoned my career, because I hate the way the technology is growing more and more enshittified. Working as a programmer for capitalistic purposes ended up depleting the joy I used to have back when I coded on a daily basis. I’m not on the “job market” anymore, so what I’m saying is based on more than 10 years of former professional experience. And my experience says: a developer that can’t put themselves into at least trying to understand the worst code out there can’t call themselves a developer, full stop.

I found the article interesting, but I agree with you. Good programmers have to and can debug other people’s code. But, to be fair, there are also a lot of bad programmers, and a lot that can’t debug for shit…

@JuvenoiaAgent@piefed.ca @technology@lemmy.world

Often, those are developers who “specialized” in one or two programming languages, without specializing in computer/programming logic.

I used to repeat a personal saying across job interviews: “A good programmer knows a programming language. An excellent programmer knows programming logic”. IT positions often require a dev to have a specific language/framework in their portfolio (with Rust being the Current Thing™ now) and they reject people who have vast experience across several languages/frameworks except the one required, as if these people weren’t able to learn the specific language/framework they require.

Languages and frameworks differ in syntax, naming and paradigms, and sometimes they’re extremely different from other common languages (such as (Lisp (parenthetic-hell)), or `.asciz "Assembly-x86_64"`), but they all talk to the same computer logic under the hood. Once a dev becomes fluent in bitwise logic (or, even better, they become so fluent in talking with computers that they can say `41 53 43 49 49 20 63 6f 64 65` without tools, as if it were English), it’s just a matter of accustoming oneself to the specific syntax and naming conventions from a given language.

Back when I was enrolled in college, I lost count of how many colleagues struggled with the entire course as soon as they were faced by Data Structure classes, binary trees, linked lists, queues, stacks… And Linear Programming, maximization and minimization, data fitness… To the majority of my colleagues, those classes were painful, especially because the teachers were somewhat rigid.
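
For anyone who doesn’t read hex as if it were English, the byte string quoted above is plain ASCII and can be decoded in a couple of lines. This is just a small Python sketch; the function name `decode_hex` is made up for the example.

```python
def decode_hex(hex_string: str) -> str:
    """Turn space-separated hex byte values into ASCII text."""
    # bytes.fromhex() skips the spaces between byte pairs.
    return bytes.fromhex(hex_string).decode("ascii")


if __name__ == "__main__":
    print(decode_hex("41 53 43 49 49 20 63 6f 64 65"))  # prints: ASCII code
```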

And this sentiment echoes across the companies and corps. Corps (especially the wannabe-programmer managers) don’t want to deal with computers, they want to deal with consumers and their sweet money, but a civil engineer and their masons can’t possibly build a house without being willing to deal with a blueprint and the physics of building materials. This is part of the root of this whole problem.

An LLM can generate code like an intern getting ahead of their skis. If you let it generate enough code, it will do some gnarly stuff.

Another facet is the nature of mistakes it makes. After years of reviewing human code, I have this tendency to take some things for granted, certain sorts of things a human would just obviously get right and I tend not to think about it. AI mistakes are frequently in areas my brain has learned to gloss over and take on faith that the developer probably didn’t screw that part up.

AI generally generates the same sorts of code that I hate to encounter when humans write it, and debugging it is a slog. Lots of repeated code, not well factored. You would assume that if the same exact thing is fine in many places, you’d have a common function with common behavior, but no, AI repeated itself and didn’t always get consistent behavior out of identical requirements.

His statement is perhaps an oversimplification, but I get it. Fixing code like that is sometimes more trouble than just doing it yourself from the outset.

Now I can see the value in generating code in digestible pieces, discarding when the LLM gets oddly verbose for a simple function, or when it gets it wrong, or if you can tell by looking you’d hate to debug that code. But the code generation can just be a huge mess and if you did a large project exclusively through prompting, I could see the end result being just a hopeless mess. I’m frankly surprised he could even declare an initial “success”, but it was probably “tutorial ware” which would be ripe fodder for the code generators.

Given the stochastic nature of LLMs and the pseudo-darwinian nature of their training process, I sometimes wonder if geneticists wouldn’t be more suited to interpreting LLM output than programmers.

@Jayjader@jlai.lu @technology@lemmy.world

Given how it’s very akin to dynamic and chaotic systems (e.g. double pendulum, whose initial position, mass and length rule the movement of the pendulum, very similar to how the initial seed and input rule the output of generative AIs) due to the insurmountable amount of physically intertwined factors and the possibility of generalizing the system in mathematical, differential terms, I’d say that the better fit would be a physicist. Or a mathematician. lol

As always, relevant xkcd: https://xkcd.com/435/

I cannot understand and debug code written by AI. But I also cannot understand and debug code written by me.

Let’s just call it even.

AI is really great for small apps. I’ve saved so many hours over weekends that would otherwise be spent coding a small thing I need a few times whereas now I can get an AI to spit it out for me.

But anything big and it’s fucking stupid, it cannot track large projects at all.

What kind of small things have you vibed out that you needed?

I’m curious about that too since you can “create” most small applications with a few lines of Bash, pipes, and all the available tools on Linux.

Maybe they don’t run Linux. 🤭

Perverts!

FWIW that’s a good question but IMHO the better question is :

What kind of small things have you vibed out that you needed that didn’t actually exist or at least you couldn’t find after a 5min search on open source forges like Codeberg, GitLab, GitHub, etc?

Because making something quick that kind of works is nice… but why even do so in the first place if it’s already out there, maybe maintained but at least tested?

Since you put such emphasis on “better”: I’d still like to have an answer to the one I posed.

Yours would be a reasonable follow-up question if we noticed that their vibed projects are utilities already available in the ecosystem. 👍

Sure, you’re right, I just worry (maybe needlessly) about people re-inventing the wheel because it’s “easier” than searching without properly understanding the cost of the entire process.

Very valid!

What if I can find it but it’s either shit or bloated for my needs?

Open an issue to explain why it’s not enough for you? If you can make a PR for it that actually implements the things you need, do it?

My point isn’t to say everything is already out there and perfectly fits your need, only that a LOT is already out there. If we all re-invent the wheel in our own corner it’s basically impossible to learn from each other.

These are the principles I follow:

https://indieweb.org/make_what_you_need

https://indieweb.org/use_what_you_make

I don’t have time to argue with FOSS creators to get my stuff in their projects, nor do I have the energy to maintain a personal fork of someone else’s work.

It’s much faster for me to start up Claude and code a very bespoke system just for my needs.

I don’t like web UIs nor do I want to run stuff in a Docker container. I just want a scriptable CLI application.

Like I just did a subtitle translation tool in 2-3 nights that produces much better quality than any of the ready made solutions I found on GitHub. One of which was an *arr stack web monstrosity and the other was a GUI application.

Neither did what I needed in the level of quality I want, so I made my own. One I can automate like I want and have running on my own server.

So the claim is it’s easier to Claudge a whole new app than to make a personal fork of one that works? Sounds unlikely.

Depends on the “app”.

A full ass Lemmy client? Nope.

A subtitle translator or an RSS feed hydrator or a similar single task “app”? Easily and I’ve done it many times already.

I don’t have time to argue with FOSS creators to get my stuff in their projects

So much this. Over the years I have found various issues in FOSS and “done the right thing” submitting patches formatted just so into their own peculiar tracking systems according to all their own peculiar style and traditions, only to have the patches rejected for all kinds of arbitrary reasons - to which I say: “fine, I don’t really want our commercial competitors to have this anyway, I was just trying to be a good citizen in the community. I’ve done my part, you just go on publishing buggy junk - that’s fine.”

And if the maintainer doesn’t agree to merge your changes, what do you do then?

You have to build your own project, where you get to decide what gets added and what doesn’t.

I built a MAL clone using AI, nearly 700 commits of AI. Obviously I was responsible for the quality of the output and reviewing and testing that it all works as expected, and leading it in the right direction when going down the wrong path, but it wrote all of the code for me.

There are other MAL clones out there, but none of them do everything I wanted, so that’s why I built my own project. It started off as an inside joke with a friend, and eventually materialized as an actual production-ready project. It’s limited more by design, in that it relies on database imports and delta edits, than by the fact that it was written by AI, because that’s just the nature of how data for these types of things tends to work.

So if it can be vibe coded, it’s pretty much certainly already a “thing”, but with some awkwardness.

Maybe what you need is a combination of two utilities, maybe the interface is very awkward for your use case, maybe you have to make a tiny compromise because it doesn’t quite match.

Maybe you want a little utility to do stuff with media. Now you could navigate your way through ffmpeg and mkvextract, which together handle what you want, with some scripting to keep you from having to remember the specific way to do things in the myriad of stuff those utilities do. An LLM could probably knock that script out for you quickly without having to delve too deeply into the documentation for the projects.
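For a sense of scale, a wrapper like that can be very small. This is only a sketch, assuming ffmpeg and mkvextract are installed and on PATH; the function names, the track ID and the stream index are hypothetical, picked purely for illustration.

```python
import subprocess
from pathlib import Path


def extract_subs_cmd(video: Path, track_id: int, out: Path) -> list[str]:
    """Build an mkvextract call that pulls one track out of a Matroska file."""
    return ["mkvextract", str(video), "tracks", f"{track_id}:{out}"]


def extract_audio_cmd(video: Path, out: Path, stream: int = 0) -> list[str]:
    """Build an ffmpeg call that copies one audio stream without re-encoding."""
    return ["ffmpeg", "-i", str(video), "-map", f"0:a:{stream}", "-c", "copy", str(out)]


def run(cmd: list[str]) -> None:
    """Run a command and raise if the tool reports failure."""
    subprocess.run(cmd, check=True)
```

Something like run(extract_subs_cmd(Path("movie.mkv"), 2, Path("movie.srt"))) would then do the actual work. Building the argument list separately from running it makes the command easy to print and eyeball before anything touches the files.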

If I understand correctly then this means mostly adapting the interface?

It’s certainly a use case that LLM has a decent shot at.

Of course, having said that I gave it a spin with Gemini 3 and it just hallucinated a bunch of crap that doesn’t exist instead of properly identifying capable libraries or frontending media tools…

But in principle and upon occasion it can take care of little convenience utilities/functions like that. I continue to have no idea though why some people seem to claim to be able to ‘vibe code’ up anything of significance, even as I thought I was giving it an easy hit it completely screwed it up…

Not OP but I made a little menu thing for launching VMs and a script for grabbing trailers for downloaded movies that reads the name of the folder, finds the trailer and uses yt-dlp to grab it, puts it in the folder and renames it.
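Roughly what a trailer grabber like that might look like, as a sketch only and not the commenter's actual script. It assumes yt-dlp is on PATH and that each movie lives in its own folder named after the film; the "-trailer" file suffix and both function names are made up for this example.

```python
import subprocess
from pathlib import Path


def trailer_cmd(movie_dir: Path) -> list[str]:
    """Build a yt-dlp call that fetches the first search hit for '<folder name> trailer'."""
    title = movie_dir.name
    # -o takes an output template; %(ext)s is filled in by yt-dlp.
    template = str(movie_dir / f"{title}-trailer.%(ext)s")
    return ["yt-dlp", f"ytsearch1:{title} trailer", "-o", template]


def grab_trailers(library: Path) -> None:
    """Fetch a trailer for each movie folder that does not already have one."""
    for movie_dir in sorted(p for p in library.iterdir() if p.is_dir()):
        if any(movie_dir.glob("*-trailer.*")):
            continue  # already downloaded on an earlier run
        subprocess.run(trailer_cmd(movie_dir), check=False)
```

The sketch only ever adds files; it never moves or deletes anything in the library, which keeps it short enough to read in full before running it.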

Definitely sounds like a tiny shell script but yeah, I guess it’s seconds with an agent rather than a few minutes with manual coding 👍

Yeah pretty much! TBH for the first one there are already things online that can do that, I just wanted to test how the AI would do so I gave it a simple thing, it worked well and so I kept using it. The second one I wasn’t sure about because it’s a bit copyright-y, but yeah like you say it was just quicker. I wouldn’t use the AI for anything super important, but I figured it’d do for a quick little script that only needs to do one specific thing just for me.

I would need to inspect every line of that shit before using it. I’d be too scared that it would delete my entire library, like that dude who got their entire drive erased by Google Antigravity…

Yeah that’s fair. And mine were pretty small scripts so easy enough to check, and I keep proper backups and whatnot so no big deal. But like I say I wouldn’t use it for anything big or important.

I made a tool to hook into a sites api and get me data each week.

Would’ve taken me a few hours to make the gui and learn the api/libraries, took Gemini 10 minutes with me only needing to give it two error outputs.

Neat, that’s cool.

I don’t really agree, I think that’s kind of a problem with approaching it. I’ve built some pretty large projects with AI, but the thing is, you have to approach it the same way you should be approaching larger projects to begin with - you need to break it down into smaller steps/parts.

You don’t tell it “build me an entire project that does X, Y, Z, and A, B, C”, you have to tackle it one part at a time.

Just sell it to AI customers for AI cash.

I think this kinda points to why AI is pretty decent for short videos, photos, and texts. It produces outputs that one applies meaning to, and humans are meaning making animals. A computer can’t overlook or rationalize a coding error the same way.

so the obvious solution is to just have humans execute our code manually. Grab a pen and some crayons, go through it step by step and write variable values on the paper and draw the interface with the crayons and show it on a webcam or something. And they can fill in the gaps with what they think the code in question is supposed to do. easy!

I do a lot with AI but it is not good enough to replace humans, not even close. It repeats the same mistakes after you tell it no, it doesn’t remember things from 3 messages ago when it should. You have to keep re-explaining the goal to it. It’s wholly incompetent. And yea when you have it do stuff you aren’t familiar with or don’t create, def. I have it write a commentary, or I take the time out right then to ask it what x or y does then I add a comment.

Even worse, the ones I’ve evaluated (like Claude) constantly fail to even compile because, for example, they mix usages of different SDK versions. When instructed to use version 3 of some package, it will add the right version as a dependency but then still code with missing or deprecated APIs from the previous version that are obviously unavailable.

More time (and money, and electricity) is wasted trying to prompt it towards correct code than simply writing it yourself and then at the end of the day you have a smoking turd that no one even understands.

LLMs are a dead end.

constantly fail to even compile because, for example, they mix usages of different SDK versions

Try an agentic tool like Claude Code - it closes the loop by testing the compilation for you, and fixing its mistakes (like human programmers do) before bothering you for another prompt. I was where you are at 6 months ago, the tools have improved dramatically since then.

From TFS > I needed to make a small change and realized I wasn’t confident I could do it. My own product, built under my direction, and I’d lost confidence in my ability to modify it.

That sounds like a “fractional CTO problem” to me (IMO a fractional CTO is a guy who convinces several small companies that he’s a brilliant tech genius who will help them make their important tech decisions without actually paying full-time attention to any of them. Actual tech experience: optional.)

If you have lost confidence in your ability to modify your own creation, that’s not a tools problem - you are the tool, that’s a you problem. It doesn’t matter if you’re using an LLM coding tool, or a team of human developers, or a pack of monkeys to code your applications, if you don’t document and test and formally develop an “understanding” of your product that not only you but all stakeholders can grasp to the extent they need to, you’re just letting the development run wild - lacking a formal software development process maturity. LLMs can do that faster than a pack of monkeys, or a bunch of kids you hired off Craigslist, but it’s the exact same problem no matter how you slice it.

If you mean I have to install Claude’s software on my own computer, no thanks.

Just ask the ai to make the change?

AI isn’t good at changing code, or really even understanding it… It’s good at writing it, ideally 50-250 lines at a time

I’m just not following the mindset of “get ai to code your whole program” and then have real people maintain it? Sounds counter productive

I think you need to make your code for an AI to maintain. Use static code analysers like SonarQube to ensure that the code is maintainable (cognitive complexity) and that functions are small and well defined as you write it.

I don’t think we should be having the AI write the program in the first place. I think we’re barreling towards a place where remotely complicated software becomes a lost technology

I don’t mind if AI helps here and there, I certainly use it. But it’s not good at custom fit solutions, and the world currently runs on custom fit solutions

AI is like no code solutions. Yeah, it’s powerful, easier to learn and you can do a lot with it… But eventually you will hit a limit. You’ll need to do something the system can’t do, or something you can’t make the system do because no one properly understands what you’ve built

At the end of the day, coding is a skill. If no one is building the required experience to work with complex systems, we’re going to be swimming in a world of endless ocean of vibe coded legacy apps in a decade

I just don’t buy that AI will be able to take something like a set of State regulations and build a compliant outcome. Most of our base digital infrastructure is like that, or it uses obscure ancient systems that LLMs are basically allergic to working with

To me, we’re risking everything on achieving AGI (and using it responsibly) before we run out of skilled workers, and we’re several game changing breakthroughs from achieving that

It’s good at writing it, ideally 50-250 lines at a time

I find Claude Sonnet 4.5 to be good up to 800 lines at a chunk. If you structure your project into 800ish line chunks with well defined interfaces you can get 8 to 10 chunks working cooperatively pretty easily. Beyond about 2000 lines in a chunk, if it’s not well defined, yeah - the hallucinations start to become seriously problematic.

The new Opus 4.5 may have a higher complexity limit, I haven’t really worked with it enough to characterize… I do find Opus 4.5 to get much slower than Sonnet 4.5 was for similar problems.

Okay, but if it’s writing 800 lines at once, it’s making design choices. Which is all well and good for a one off, but it will make those choices, make them a different way each time, and it will name everything in a very generic or very eccentric way

The AI can’t remember how it did it, or how it does things. You can do a lot… Even stuff that hasn’t entered commercial products like vectorized data stores to catalog and remind the LLM of key details when appropriate

2000 lines is nothing. My main project is well over a million lines, and the original author and I have to meet up to discuss how things flow through the system before changing it to meet the latest needs

But we can and do it to meet the needs of the customer, with high stakes, because we wrote it. These days we use AI to do grunt work, we have junior devs who do smaller tweaks.

If an AI is writing code a thousand lines at a time, no one knows how it works. The AI sure as hell doesn’t. If it’s 200 lines at a time, maybe we don’t know details, but the decisions and the flow were decided by a person who understands the full picture

but it will make those choices, make them a different way each time

That’s a bit of the power of the process: variety. If the implementation isn’t ideal, it can produce another one. In theory, it can produce ten different designs for any given solution then select the “best” one by whatever criteria you choose. If you’ve got the patience to spell it all out.

The AI can’t remember how it did it, or how it does things.

Neither can the vast majority of people after several years go by. That’s what the documentation is for.

2000 lines is nothing.

Yep. It’s also a huge chunk of example to work from and build on. If your designs are highly granular (in a good way), most modules could fit under 2000 lines.

My main project is well over a million lines

That should be a point of embarrassment, not pride. My sympathies if your business really is that complicated. You might ask an LLM to start chipping away at refactoring your code to collect similar functions together to reduce duplication.

But we can and do it to meet the needs of the customer, with high stakes, because we wrote it. These days we use AI to do grunt work, we have junior devs who do smaller tweaks.

Sure. If you look at bigger businesses, they are always striving to get rid of “indispensable duos” like you two. They’d rather pay 6 run-of-the-mill hire-more-any-day-of-the-week developers than two indispensables. And that’s why a large number of management types who don’t really know how it works in the trenches are falling all over themselves trying to be the first to fly a team that “does it all with AI, better than the next guys.” We’re a long way from that being realistic. AI is a tool, you can use it for grunt work, you can use it for top level design, and everything in-between. What you can’t do is give it 25 words or less of instruction and expect to get back anything of significant complexity. That 2000 line limit becomes 1 million lines of code when every four lines of the root module describes another module.
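Spelling out that last bit of arithmetic, under the idealized assumption that the root module and every module it describes are each exactly 2000 lines (the constant names are just labels for this example):

```python
LINES_PER_MODULE = 2000        # the rough per-chunk limit discussed above
ROOT_LINES_PER_SUBMODULE = 4   # "every four lines of the root module describes another module"

# How many modules a 2000-line root can describe at four lines apiece.
submodules = LINES_PER_MODULE // ROOT_LINES_PER_SUBMODULE
# Total size if each described module is itself at the 2000-line limit.
total_lines = submodules * LINES_PER_MODULE

print(submodules)   # 500
print(total_lines)  # 1000000
```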

If an AI is writing code a thousand lines at a time, no one knows how it works.

Far from it. Compared with code I get to review out of India, or Indiana, 2000 lines of AI code is just as readable as any 2000 lines I get out of my colleagues. Those colleagues also make the same annoying deviations from instructions that AI does; the biggest difference is that AI gets its wrong answer back to me within 5-10 minutes. Indiana? We’ve been correcting and recorrecting the same architectural implementation for the past 6 months. They had a full example in C++, they are going to “translate it to Rust” for us. I figured, it took me about 6 weeks total to develop the system from scratch, with a full example like they have they should be well on their way in 2 weeks. Yeah, nowhere in 2 weeks, so I do a Rust translation for them in the next two weeks, show them. O.K. we see that, but we have been tasked to change this aspect of the interface to something undefined, so we’re going to do an implementation with that undefined interface… and so I refine my Rust implementation to a highly polished example ready for any undefined interface you throw at it within another 2 weeks, and Indiana continues to hack away at three projects simultaneously, getting nowhere equally fast on all 3. It has been 7 months now, I’m still reviewing Indiana’s code and reminding them, like I did the AI, of all the things I have told them six times over the past 7 months that they keep drifting off from.

Holy shit, you’re a fucking retard. Like of the “people look and laugh” scale. I’m unironically going to take your response to share with technical people in my life to laugh over

And no hate to the mentally ill, I’ve never laughed at them. I laugh with them, because they’re delightful and love joy to an extent that leaves me jealous

But you’re not a real person. You’re a joke, if your ego was two sizes smaller I’d be gently explaining to you how no number of code katas would result in Microsoft XP

I’ve made full-ass changes on existing codebases with Claude

It’s a skill you can learn, pretty close to how you’d work with actual humans

What full ass changes have you made that can’t be done better with a refactoring tool?

I believe Claude will accept the task. I’ve been fixing edge cases in a vibe colleague’s full-ass change all month. Would have taken less time to just do it right the first time.

I just did three tasks purely with Claude - at work.

All were pretty much me pasting the Linear ticket to Claude and hitting go. One got some improvement ideas on the PR so I said “implement the comments from PR 420” and so it did.

These were all on a codebase I haven’t seen before.

The magic sauce is that I’ve been doing this for a quarter century and I’m pretty good at reading code and I know if something smells like shit code or not. I’m not just YOLOing the commits to a PR without reading first, but I save a ton of time when I don’t need to do the grunt work of passing a variable through 10 layers of enterprise code.

pretty close to how you’d work with actual humans

That has been my experience as well. It’s like working with humans who have extremely fast splinter skills, things they can rip through in 10 minutes that might take you days, weeks even. But then it also takes 5-10 minutes to do some things that you might accomplish in 20 seconds. And, like people, it’s not 100% reliable or accurate, so you need to use all those same processes we have developed to help people catch their mistakes.

I don’t know shit about anything, but it seems to me that the AI already thought it gave you the best answer, so going back to the problem for a proper answer is probably not going to work. But I’d try it anyway, because what do you have to lose?

Unless it gets pissed off at being questioned, and destroys the world. I’ve seen more than few movies about that.

You are in a way correct. If you keep sending the context of the “conversation” (in the same chat) it will reinforce its previous implementation.

The way ais remember stuff is that you just give it the entire thread of context together with your new question. It’s all just text in text out.

But once you start a new conversation (meaning you don’t give any previous chat history) it’s essentially a “new” ai which didn’t know anything about your project.

This will have a new random seed, and if you ask it to look for mistakes etc. it will happily tell you that the last implementation was all wrong and here’s how to fix it.

It’s like a minecraft world, same seed will get you the same map every time. So with AIs it’s the same thing ish. start a new conversation or ask a different model (gpt, Google, Claude etc) and it will do things in a new way.
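The “text in, text out” part can be sketched without calling any real model. The role/content message shape below is an assumption loosely modeled on common chat APIs, and the Conversation class is hypothetical; the only point is that the history lives on the client side and gets resent every turn.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Chat history kept on the client side; the model itself stores nothing."""
    messages: list[dict] = field(default_factory=list)

    def build_prompt(self, question: str) -> str:
        """Append the question and return the full text that would be sent."""
        self.messages.append({"role": "user", "content": question})
        return "\n".join(f"{m['role']}: {m['content']}" for m in self.messages)

    def record_reply(self, reply: str) -> None:
        """Store the model's answer so it is resent with the next question."""
        self.messages.append({"role": "assistant", "content": reply})


old_chat = Conversation()
old_chat.build_prompt("Write a parser for my config format.")
old_chat.record_reply("Here is implementation A ...")
followup = old_chat.build_prompt("Any mistakes in it?")

fresh_chat = Conversation()
fresh = fresh_chat.build_prompt("Any mistakes in this parser? <code pasted here>")

print("implementation A" in followup)  # True: the earlier answer rides along
print("implementation A" in fresh)     # False: the new chat never saw it
```

In the old chat, the model's own earlier answer is part of the input it is asked to critique; in the fresh one, it only sees what you paste in.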

Doesn’t work. Any semi complex problem with multiple constraints and your team of AIs keeps running circles. Very frustrating if you know it can be done. But what if you’re a “fractional CTO” and you get actually contradictory constraints? We haven’t gotten yet to AIs who will tell you that what you ask is impossible.

Yeah right now you have to know what’s possible and nudge the ai in the right direction to use the correct approach according to you if you want it to do things in an optimized way

Maybe the solution is to keep sending the code through various AI requests, until it either gets polished up, or gains sentience, and destroys the world. 50-50 chance.

This stuff ALWAYS ends up destroying the world on TV.

Seriously, everybody is complaining about the quality of AI product, but the whole point is for this stuff to keep learning and improving. At this stage, we’re expecting a kindergartener to produce the work of a Harvard professor. Obviously, we’re going to be disappointed.

But give that kindergartener time to learn and get better, and they’ll end up a Harvard professor, too. AI may just need time to grow up.

And frankly, that’s my biggest worry. If it can eventually start producing results that are equal or better than most humans, then the Sociopathic Oligarchs won’t need worker humans around, wasting money that could be in their bank accounts.

And we know what their solution to that problem will be.

I work in a company that is all-in on selling AI and we are trying desperately to use this AI ourselves. We’ve concluded internally that AI can only be trusted with small use cases that are easily validated by humans, or for fast prototyping work… hack day stuff to validate a possibility but not an actual high quality safe and scalable implementation, or in writing tests of existing code, to increase test coverage. Yes, I know that’s a bad idea but QA blessed the result… so um … cool.

The use case we zeroed in on is writing well schema’d configs in yaml or json. Even then, a good percentage of the time the AI will miss very significant mandatory sections, or add hallucinations that are unrelated to the task at hand. We then can use AI to test AI’s work, several times using several AIs. And to a degree, it’ll catch a lot of the issues, but not all. So we then code review and lint with code we wrote that AI never touched, and send all the erroring configs to a human. It does work, but can’t be used for mission critical applications. And nothing about the AI or the process of using it is free. It’s also disturbingly not idempotent. Did it fail? Run it again a few times and it’ll pass. We think it still saves money when done at scale, but not as much as we promise external AI consumers. The senior leadership know it’s currently overhyped trash and pressure us to use it anyway on expectations it’ll improve in the future, so we give the mandatory crisp salute of alignment and we’re off.
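A minimal sketch of that last hand-written gate, not the actual pipeline described above: deterministic lint code checks each generated JSON config for mandatory sections and unexpected extras, and anything that fails is queued for a human. The section names in REQUIRED_SECTIONS and both function names are hypothetical.

```python
import json

REQUIRED_SECTIONS = {"name", "version", "endpoints"}  # hypothetical mandatory keys


def lint_config(text: str) -> list[str]:
    """Return a list of problems found in one generated JSON config."""
    try:
        config = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(config, dict):
        return ["top level must be an object"]
    present = set(config)
    problems = [f"missing section: {key}" for key in sorted(REQUIRED_SECTIONS - present)]
    problems += [f"unexpected section: {key}" for key in sorted(present - REQUIRED_SECTIONS)]
    return problems


def triage(configs: dict[str, str]) -> tuple[list[str], dict[str, list[str]]]:
    """Split generated configs into those that pass and those a human must review."""
    passed, for_human = [], {}
    for name, text in configs.items():
        problems = lint_config(text)
        if problems:
            for_human[name] = problems
        else:
            passed.append(name)
    return passed, for_human
```

Because the check is plain code, the same input always gives the same verdict, unlike asking a model to regenerate or re-review the config.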

I will say it’s great for writing yearly personnel reviews. It adds nonsense and doesn’t get the whole review correct, but it writes very flowery stuff so managers don’t have to. So we use it for first drafts and then remove a lot of the true BS out of it. If it gets stuff wrong, oh well, human perception is flawed.

This is our shared future. One of the biggest use cases identified for the industry is health care. Because it’s hard to assign blame on errors when AI gets it wrong, and AI will do whatever the insurance middle men tell it to do.

I think we desperately need a law saying no AI use in health care decisions, before it’s too late. This half-assed tech is 100% going to kill a lot of sick people.

At work there’s a lot of rituals where processes demand that people write long internal documents that no one will read, but management will at least open it up, scroll and be happy to see such long documents with credible looking diagrams, but never read them, maybe looking at a sentence or two they don’t know, but nod sagely at.

LLM can generate such documents just fine.

Incidentally an email went out to salespeople. It told them they didn’t need to know how to code or even have technical skills, they could just use Gemini 3 to code up whatever a client wants and then sell it to them. I can’t imagine the mind that thinks that would be a viable business strategy, even if it worked that well.

fantastic for pumping a bubble though, to idiots with more $ than sense

Yeah, this one is going to hurt. I’m pretty sure my rather long career will be toast as my company and mostly my network of opportunities are all companies that are bought so hard into the AI hype that I don’t know that they will be able to survive that going away.

if you don’t mind compromising your morals somewhat and have moderate understanding of how the stock market casino works… loads of $ to be made when it pops, at least

Yeah, but mispredicting that would hurt. The market can stay irrational longer than I can stay solvent, as they say.

eh, not if you know how it works. basic hedging and not shorting stuff limits your risk significantly.

especially in a bull market where ratfucking and general fraud is out in the open for all to see

AI is hot garbage and anyone using it is a skillless hack. This will never not be true.

Wait so I should just be manually folding all these proteins?

Do you not know the difference between an automated process and machine learning?

Yes? Machine learning has been huge for protein folding and not because anyone is stupid, it’s because it’s a task uniquely suited for machine learning, of which there are many. But none of that is what this AI bubble is really about, and even though I find the underlying math and technology fascinating, I share the disdain for how the bulk of it is currently being used.

The thing with being cocky is, if you are wrong it makes you look like an even bigger asshole

https://en.wikipedia.org/wiki/AlphaFold

The program uses a form of attention network, a deep learning technique that focuses on having the AI identify parts of a larger problem, then piece it together to obtain the overall solution.

Cool, now do an environmental impact on it.

Cool, now do an environmental impact on the data centre hosting your instance while you pollute by mindlessly talking shit on the Internet.

I’ll take AI unfolding proteins over you posting any day.

Hilarious. You’re comparing a lemmy instance to AI data centers. There’s the proof I needed that you have no fucking clue what you’re talking about.

“bUt mUh fOLdeD pRoTEinS,” said the AI minion.