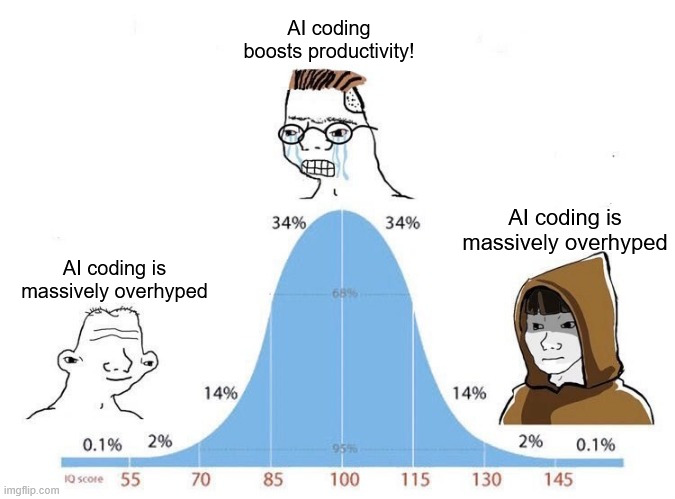

“No Duh,” say senior developers everywhere.

The article explains that vibe code often is close, but not quite, functional, requiring developers to go in and find where the problems are - resulting in a net slowdown of development rather than productivity gains.

Senior Management in much of Corporate America is like a kind of modern Nobility in which looking and sounding the part is more important than strong competence in the field. It’s why buzzwords catch like wildfire.

For most large projects, writing the code is the easy part anyway.

This article sums up a Stanford study of AI and developer productivity. TL;DR - the net productivity boost is a modest 15-20%, dropping to somewhere between negative and 10% in complex, brownfield codebases. This tracks with my own experience as a dev.

https://www.linkedin.com/pulse/does-ai-actually-boost-developer-productivity-striking-çelebi-tcp8f

I work adjacent to software developers, and I have been hearing a lot of the same sentiments. What I don’t understand, though, is the magnitude of this bubble then.

Typically, bubbles seem to form around some new market phenomenon or technology that threatens to upset the old paradigm and usher in a new boom. Those market phenomena then eventually take their place in the world based on their real value, which is nowhere near the level of the hype, but still substantial.

In this case, I am struggling to find examples of the real benefits of a lot of these AI assistant technologies. I know that there are a lot of successes in the AI realm, but not a single one I know of involves an LLM.

So, I guess my question is, “What specific LLM tools are generating profits or productivity at a substantial level well exceeding their operating costs?” If there really are none, or if the gains are only incremental, then my question becomes an incredulous, “Is this biggest-in-history tech bubble really composed entirely of unfounded hype?”

From what I’ve seen and heard, there are a few factors to this.

One is that the tech industry right now is built on venture capital. In order to survive, they need to act like they’re at the forefront of the Next Big Thing in order to keep bringing investment money in.

Another is that LLMs are uniquely suited to extending the honeymoon period.

The initial impression you get from an LLM chatbot is significant. This is a chatbot that actually talks like a person. A VC mogul sitting down to have a conversation with ChatGPT, when it was new, was a mind-blowing experience. This is a computer program that, at first blush, appears to be able to do most things humans can do, as long as those things primarily consist of reading things and typing things out - which a VC, and mid/upper management, does a lot of. This gives the impression that AI is capable of automating a lot of things that previously needed a live, thinking person - which means a lot of savings for companies who can shed expensive knowledge workers.

The problem is that the limits of LLMs are STILL poorly understood by most people. Despite constructing huge data centers and gobbling up vast amounts of electricity, LLMs still are bad at actually being reliable. This makes LLMs worse at practically any knowledge work than the lowest, greenest intern - because at least the intern can be taught to say they don’t know something instead of feeding you BS.

It was also assumed that bigger, hungrier LLMs would provide better results. Although they do, the gains are getting harder and harder to reach. There needs to be an efficiency breakthrough (and a training breakthrough) before the wonderful world of AI can actually come to pass because as it stands, prompts are still getting more expensive to run for higher-quality results. It took a while to make that discovery, so the hype train was able to continue to build steam for the last couple years.

Now, tech companies are doing their level best to hide these shortcomings from their customers (and possibly even themselves). The longer they keep the wool over everyone’s eyes, the more money continues to roll in. So, the bubble keeps building.

The upshot of this and a lot of the other replies I see here and elsewhere seems to be that one big difference between this bubble and past ones is that so much of the global economy is now tied to its fate that the entire financial world is colluding to delay the inevitable, due to the expected severity of the consequences.

I’m not a programmer in any sense. Recently, I made a project where I used Python and a Raspberry Pi and had to train some small models on a KITTI data set. I used AI to write the broad structure of the code, but in the end, it took me a lot of time going through the Python documentation as well as the documentation of the specific tools/modules I used to actually get the code working. Would an experienced programmer get the same work done in an afternoon? Probably. But the code the AI output still had a lot of flaws. Someone who knows more than me would probably input better prompts and better follow-up requirements and probably get a better structure from the AI, but I doubt they’d get complete code. In the end, you have to know what you’re doing to use AI efficiently, and you still have to polish the code into something that actually works.

From my experience, AI just seems to be a lesson in overfitting. You can’t use it to do things nobody has done before. Furthermore, you only really get good responses from prompts related to JavaScript.

Writing apps with AI seems pretty cooked. But I’ve had some great successes using GitHub Copilot for some annoying scripting work.

AI works well for mindless tasks. Data formatting, rough drafts, etc.

Once a task requires context and abstract thinking, AI can’t handle it.

Eh, I don’t know. As long as you can break it down into smaller sub-tasks, AI can do some complex stuff. Just have to figure out where the line is. I’ve nudged it along into reading multiple LENGTHY API documentation pages and writing some fairly complex scripting logic.

Trusting it to have not fucked with your data while formatting it is pretty bold.

I think maybe a good comparison is to written papers/assignments. It can generate those just like it can generate code.

But it is not about the words themselves, but about the content.

These types of articles always fail to mention how well trained the developers were on techniques and tools. In my experience that makes a big difference.

My employer mandates we use AI and provides us with any model, IDE, service we ask for. But where it falls short is providing training or direction on ways to use it. Most developers seem to go for results prompting and get a terrible experience.

I, on the other hand, provide a lot of context through documents and various MCP tooling. I talk about the existing patterns in the codebase and provide sources to other repositories as examples, then we come up with an implementation plan and execute on it with a task log to stay on track. I spend very little time fixing bad code because I spent the setup time nailing down context.

So if a developer is just prompting “Do XYZ”, it’s no wonder they’re spending more time untangling a random mess.

Another aspect is that everyone seems to always be working under the gun and they just don’t have the time to figure out all the best practices and techniques on their own.

I think this should be considered when we hear things like this.

I have 3 questions, and I’m coming from a heavily AI-skeptic position, but am open:

- Do you believe that providing all that context, describing the existing patterns, creating an implementation plan, etc, allows the AI to both write better code and faster than if you just did it yourself? To me, this just seems like you have to re-write your technical documentation in prose each time you want to do something. You are saying this is better than ‘Do XYZ’, but how much twiddling of your existing codebase do you need to do before an AI can understand the business context of it? I don’t currently do development on an existing codebase, but every time I try to get these tools to do something fairly simple from scratch, they just flail. Maybe I’m just not spending the hours to build my AI-parsable functional spec. Every time I’ve tried this, asking something as simple as (and paraphrased for brevity) “write an Asteroids clone using JavaScript and HTML 5 Canvas” results in a full failure, even with multiple retries chasing errors. I wrote something like that a few years ago to learn JavaScript and it took me a day-ish to get something that mostly worked.

- Speaking of that context. Are you running your models locally, or do you have some cloud service? If you give your entire codebase to a 3rd party as context, how much of your company’s secret sauce have you disclosed? I’d imagine most sane companies are doing something to make their models local, but we see regular news articles about how ChatGPT is training on user input and leaking sensitive data if you ask it nicely and I can’t imagine all the pro-AI CEOs are aware of the risks here.

- How much pen-testing time are you spending on this code, error handling, edge cases, race conditions, data sanitation? An experienced dev understands these things innately, having fixed these kinds of issues in the past and knows the anti-patterns and how to avoid them. In all seriousness, I think this is going to be the thing that actually kills AI vibe coding, but it won’t be fast enough. There will be tons of new exploits in what used to be solidly safe places. Your new web front-end? It has a really simple SQL injection attack. Your phone app? You can tell it your username is admin’joe@google.com and it’ll let you order stuff for free since you’re an admin.
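To make the SQL injection worry concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The table, column names, and usernames are made up for illustration and don't come from any real app; the point is only the difference between pasting input into the query text and passing it as a parameter.

```python
import sqlite3

def make_db():
    """Build a throwaway in-memory database with two hypothetical users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, is_admin INTEGER)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("admin", 1), ("joe", 0)],
    )
    return conn

def find_user_unsafe(conn, name):
    # Anti-pattern: user input is pasted straight into the SQL text.
    query = f"SELECT name, is_admin FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()

def find_user_safe(conn, name):
    # Parameterized query: the driver treats `name` strictly as data.
    query = "SELECT name, is_admin FROM users WHERE name = ?"
    return conn.execute(query, (name,)).fetchall()

conn = make_db()
malicious = "nobody' OR is_admin = 1 --"
unsafe_rows = find_user_unsafe(conn, malicious)  # leaks the admin row
safe_rows = find_user_safe(conn, malicious)      # matches nothing
```

The unsafe version hands back the admin row for a username that doesn't exist, while the parameterized version returns nothing. This is the kind of anti-pattern a reviewer has to know to look for, whoever (or whatever) wrote the code.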

I see a place for AI-generated code, for instant functions that do something blending simple and complex. “Hey Claude, write a function to take a string and split it at the end of every sentence containing an uppercase A.” I had to write weird functions like that constantly as a sysadmin, and transforming data seems like a thing an AI could help me accelerate. I just don’t see that working on a larger scale, though, or trusting an AI enough to allow it to integrate a new function like that into an existing codebase.
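For a sense of scale, that kind of one-off function might look roughly like the sketch below. The request is ambiguous, so this assumes one reading: break the text after each sentence that contains an uppercase "A", and let sentences without one ride along with the next chunk. The function name and the naive sentence detection are my own choices, not a definitive answer.

```python
import re

def split_after_sentences_with_A(text):
    """Split text after every sentence that contains an uppercase 'A'."""
    # Naive sentence detection: a run of characters ending in ., ! or ?
    # (or whatever trails at the end of the string).
    sentences = re.findall(r"[^.!?]+[.!?]+|[^.!?]+$", text)
    chunks, current = [], ""
    for sentence in sentences:
        current += sentence
        if "A" in sentence:
            # This sentence qualifies, so the chunk ends here.
            chunks.append(current.strip())
            current = ""
    if current.strip():
        # Keep any trailing sentences that never hit an 'A'.
        chunks.append(current.strip())
    return chunks

example = split_after_sentences_with_A(
    "Hello there. An apple fell. So what? Ask Bob! The end."
)
```

Even here, someone still has to decide what "sentence" means (abbreviations like "e.g." will trip the regex) and check the edge cases.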

Thank you for reading my comment. I’m on the train headed to work and I’ll try to answer completely. I love talking about this stuff.

> Do you believe that providing all that context, describing the existing patterns, creating an implementation plan, etc, allows the AI to both write better code and faster than if you just did it yourself?

For my work, absolutely. My work is a lot of tickets that were set up from multiple stories and multiple epics. It would be like asking me if I am really framing a house faster with a nail gun and compressor. If I were just hanging up a picture or a few pictures in the hallway, it’s probably faster to use a hammer than to set up the compressor and nail gun, plus cleanup.

However, a lot of that documentation already exists by the time it gets to me. All of the Design Documents and Product Requirement Documents have already been formed, discussed, and approved by our architecture team and team leads. Imagine if you already had this documentation for the asteroid game; how much better do you think your LLM would do? Maybe this is the benefit of using LLMs for development at an established company. Btw, a lot of those Documents were also created with the assistance of AI by the Product Team, Architects, and Principal/Staff/Leads anyway.

> how much twiddling of your existing codebase do you need to do before an AI can understand the business context of it?

With the help of our existing documents and codebase(s) I feel I don’t have any issues with the model knowing what we’re doing. I do have to set up my own context for how I want it to be done. To me this is like explaining to a Junior Engineer what I need them to help me with. If you’re familiar with “Know when to Direct, when to Delegate, or when to Develop” I would say it lands in between Direct and Delegate. I have markdown files with my rules and guidelines and provide that as context. I use Augment Code which is pretty good with codebase context.

> write an Asteroids clone using JavaScript and HTML 5 Canvas

I would try “Let’s plan out the steps needed to write an Asteroids game using JavaScript and HTML 5. Identify and explain each step of the development plan. The game must build with no errors, be playable, and pass all tests. Do not write code at this time until our plan is approved.” Then once it comes back with the initial steps, I would guide it further if needed. Finally I would approve the plan and tell it to execute while tracking its steps (Augment Code uses a task log).

> Are you running your models locally, or do you have some cloud service? If you give your entire codebase to a 3rd party as context, how much of your company’s secret sauce have you disclosed?

We are required to use the frontier models that my employer has contracts with and are forbidden from using local models. In our enterprise contracts we have negotiated for no training on our data. I imagine we pay for that. I’m not involved in that level of interaction on the accounts.

> How much pen-testing time are you spending on this code, error handling, edge cases, race conditions, data sanitation? An experienced dev understands these things innately, having fixed these kinds of issues in the past and knows the anti-patterns and how to avoid them. In all seriousness, I think this is going to be the thing that actually kills AI vibe coding, but it won’t be fast enough. There will be tons of new exploits in what used to be solidly safe places.

We have other teams that handle a lot of these tasks. These teams are also using AI tools to get the job done. In addition, we have static testing tools on our repo like CodeRabbit and another one I can’t remember the name of that looks specifically for security concerns. It will comment on the PR directly and our merge would be blocked until handled. Code coverage for testing is at 85% or it blocks the merge and we have a full QA department of Analysts and SDETs to QA. In addition to that we still have human approvals required (2 devs + Sr+). All of these people involved are still using AI tools to help them in each step.
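As a rough illustration of how gates like these stack up, here is a small sketch of a merge check. It is not our actual tooling: the `PullRequest` fields and the `merge_blockers` function are hypothetical names, and it assumes "2 devs + Sr+" means two developer approvals plus at least one approval from a senior-or-above engineer.

```python
from dataclasses import dataclass

@dataclass
class PullRequest:
    coverage_percent: float      # test coverage reported by CI
    open_security_findings: int  # unresolved comments from the scanners
    dev_approvals: int           # approvals from regular developers
    senior_approvals: int        # approvals from senior-or-above engineers

def merge_blockers(pr, min_coverage=85.0, min_devs=2, min_seniors=1):
    """Return the list of reasons a PR cannot merge (empty list means OK)."""
    blockers = []
    if pr.coverage_percent < min_coverage:
        blockers.append("coverage below threshold")
    if pr.open_security_findings > 0:
        blockers.append("unresolved security findings")
    if pr.dev_approvals < min_devs:
        blockers.append("not enough developer approvals")
    if pr.senior_approvals < min_seniors:
        blockers.append("missing senior approval")
    return blockers

ready = PullRequest(91.0, 0, 2, 1)
risky = PullRequest(70.0, 3, 1, 0)
```

Here `merge_blockers(ready)` comes back empty, while `risky` fails all four checks. The idea is that AI-written code has to clear the same independent checks as anything else.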

I hope that answers your questions and gives you some insight into how I’ve found success in my experience with it. I will say that on my personal projects I don’t go this far with process and I don’t experience the same AI output that I do at work.

Thanks for your reply, and I can still see how it might work.

I’m curious if you have any resources that do some end-to-end examples. This is where I struggle. If I have an atomic piece of code I need, I can maybe get it started with an LLM and finish it by hand, but anything larger seems to just always fail. So far the best video I found to try a start-to-finish demo was this: https://www.youtube.com/watch?v=8AWEPx5cHWQ

He spends plenty of time describing the tools and how to use them, but when we get to the actual work, we spend 20 minutes telling the LLM that it’s doing stuff wrong. There’s eventually a prototype, but to get there he had to alternate between ‘I still can’t jump’ and ‘here’s the new error.’ He eventually modified code himself, so even getting a ‘mario clone’ running requires an actual developer and the final result was underwhelming at best.

For me, a ‘game’ is this tiny product that could be a viable unit. It doesn’t need to talk to other services, it just needs to react to user input. I want to see a speed-run of someone using LLMs to make a game that is playable. It doesn’t need to be “fun”, but the video above only got to the ‘player can jump and gets game over if hitting enemy’ stage. How much extra effort would it take to make the background not flat blue? Is there a win condition? How to refactor this so that the level is not hard-coded? Multiple enemy types? Shoot a fireball that bounces? Power Ups? And does doing any of those break jump functionality again? How much time do I have to spend telling the LLM that the fireball still goes through the floor and doesn’t kill an enemy when it hits them?

I could imagine that if the LLM was handed a well described design document and technical spec that it could do better, but I have yet to see that demonstrated. Given what it produces for people publishing tutorials online, I would never let it handle anything business critical.

The video is an hour long, and spends about 20 minutes in the middle actually working on the project. I probably couldn’t do better, but I’ve mostly forgotten my JavaScript and HTML canvas. If kaboom.js was my focus, though, I imagine I could knock out what he did in well under 20 minutes and have a better-architected design that handled the above questions.

Luckily, I haven’t yet been mandated to embed AI into my pseudo-developer role, but they are asking.

- I would say absolutely, in the general sense most people, and the salesmen, frame them in.

When I was invited to assist with the GDC development, I got a chance to partner with a few AI developers and see the development process firsthand, try my hand at it myself, and get my hands on a few low parameter models for my own personal use. It’s really interesting just how capable some models are in their specific use-cases. However, even high param. models easily become useless at the drop of a hat.

I found the best case, one that’s rarely done mind you, is to integrate the model into a program that has the ability to call a known database. With a model properly trained to format output in natural language and to use a given database for context calls and concrete information, the qualitative performance improves by leaps and bounds. Problem is, that requires so much customization it pretty much ends up being something a capable hobbyist would do; it’s just not economically sound for a business to adopt.

Even though this shit was apparent from day fucking 1, at least the Tech Billionaires were able to cause mass layoffs, destroy an entire generation of new programmers’ careers, introduce an endless amount of tech debt and security vulnerabilities, all while grifting investors/businesses and making billions off of all of it.

Sad excuses for sacks of shit, all of them.

> “No Duh,” say senior developers everywhere.

I’m so glad this was your first line in the post

If not to editorialize, what else is the text box for? :)

No duh, says a layman who never wrote code in his life.

Thing is, both statements can be true.

Used appropriately and in the right context, LLMs can accelerate some select work.

But the hype level is ‘human replacement is here (or imminent, depending on if the company thinks the audience is willing to believe yet or not)’. Recently Anthropic suggested someone could just type ‘make a slack clone’ and it’ll all be done and perfect.

Might be there someday, but right now it’s basically a substitute for me googling some shit.

If I let it go ham, and code everything, it mutates into insanity in a very short period of time.

I’m honestly doubting it will get there someday, at least with the current use of LLMs. There just isn’t true comprehension in them, no space for consideration in any novel dimension. If it takes incredible resources for companies to achieve sometimes-kinda-not-dogshit, I think we might need a new paradigm.

They are statistical prediction machines. The more they output, the larger the portion of their “context window” (statistical prior) becomes the very output they generated. It’s a fundamental property of the current LLM design that the snake will eventually eat enough of its tail to puke garbage code.
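The arithmetic behind that is easy to sketch. The snippet below only shows how the self-generated share of the context grows as output piles up; the token counts are made-up numbers, it ignores truncation or summarization of the context, and it says nothing by itself about how quality changes.

```python
def self_generated_share(prompt_tokens, generated_tokens):
    """Fraction of the context that is the model's own earlier output."""
    total = prompt_tokens + generated_tokens
    return generated_tokens / total if total else 0.0

# Hypothetical session: a 2,000-token human prompt, growing model output.
for generated in (0, 2_000, 8_000, 32_000):
    share = self_generated_share(2_000, generated)
    print(f"{generated:>6} generated tokens -> {share:.0%} of context")
```

With a fixed 2,000-token prompt, the model's own output is half the context after 2,000 generated tokens and 80% after 8,000.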

A crazy number of devs weren’t even using EXISTING code assistant tooling.

Enterprise grade IDEs already had tons of tooling to generate classes and perform refactoring in a sane and algorithmic way. In a way that was deterministic.

So many use cases people have tried to sell me on (boilerplate handling) and I’m like “you have that now and don’t even use it!”.

I think there is probably a way to use LLMs to try and extract intention and then call real dependable tools to actually perform the actions. This cult of purity where the LLM must actually be generating the tokens itself… why?
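Something like the following sketch is what I have in mind. Everything here is hypothetical: `extract_intent` is a hard-coded stand-in for where a model call would go, and the only "tool" is a simple whole-word rename. The point is that the model only picks the tool and arguments, and deterministic code does the actual edit.

```python
import re

def rename_identifier(source, old, new):
    """Deterministic tool: rename whole-word occurrences of an identifier."""
    return re.sub(rf"\b{re.escape(old)}\b", new, source)

# Registry of dependable tools the model is allowed to pick from.
TOOLS = {"rename": rename_identifier}

def extract_intent(request):
    """Stand-in for an LLM call that returns structured intent.

    Hypothetical: a real system would ask a model to produce this dict.
    Here it is a hard-coded parser for requests like 'rename foo to bar'.
    """
    match = re.fullmatch(r"rename (\w+) to (\w+)", request.strip())
    if not match:
        raise ValueError(f"could not understand request: {request!r}")
    return {"tool": "rename", "args": {"old": match[1], "new": match[2]}}

def run(request, source):
    intent = extract_intent(request)
    tool = TOOLS[intent["tool"]]  # unknown tool names fail loudly (KeyError)
    return tool(source, **intent["args"])

code = "total = count + 1\nprint(count, recount)"
result = run("rename count to n", code)
```

A regex rename is cruder than what a real IDE refactoring does (it would also touch strings and comments), but it gives the same result every time, which is the property I care about.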

I’m all for coding tools. I love them. They have to actually work though. Paradigm is completely wrong right now. I don’t need it to “appear” good, I need it to BE good.

Exactly. We’re already bootstrapping, re-tooling, and improving the entire process of development to the best of our collective ability. Constantly. All through good, old fashioned, classical system design.

Like you said, a lot of people don’t even put that to use, and they remain very effective. Yet a tiny speck of AI tech and its marketing is convincing people we’re about to either become gods or be usurped.

It’s like we took decades of technical knowledge and abstraction from our Computing Canon and said “What if we didn’t use that anymore?”

This is the smoking gun. If the AI hype boys really were getting that “10x engineer” out of AI agents, then regular developers would not be able to even come close to competing. Where are these 10x engineers? What have they made? They should be able to spin up whole new companies, with whole new major software products. Where are they?

I think we’ve tapped most of the mileage we can get from the current science. The AI bros conveniently forget there have been multiple AI winters; I suspect we’ll see at least one more before “AGI” (if we ever get there).

Almost like it’s a desperate bid from the C-suite to blow another stock/asset bubble to keep ‘the economy’ going. They all knew the housing bubble was going to pop when this all started, and now it is.

Funniest thing in the world to me is high and mid level execs and managers who believe their own internal and external marketing.

The smarter people in the room realize their propaganda is in fact propaganda, and are rolling their eyes internally that their henchmen are so stupid as to be true believers.

Glad someone paid a bunch of worthless McKinsey consultants for what I could’ve told you myself.

It is not worthless. My understanding is that management only trusts sources that are expensive.

Yep, going through that at work, they hired several consultant companies and near as I can tell, they just asked employees how the company was screwing up, we largely said the same things we always say to executives, they repeated them verbatim, and executives are now praising the insight on how to fix our business…